ChatGPT vs Personality Assessments: Does it have the right personality traits to get an interview?

Thursday 28th September


In previous instalments of this research series, we delved deep into ChatGPT’s performance on two forms of traditional psychometric assessment –– the Aptitude test and the Situational Judgement Test. Our findings? ChatGPT outperforms 98.8% of human candidates in verbal reasoning tests and scores in the 70th percentile on Situational Judgement Tests.

As part of our expansive review of Generative AI’s impact on the selection process, we turned next to the Personality Assessment –– another common sifting method used by talent acquisition teams to assess early careers and experienced candidates alike.

We wanted to objectively understand whether ChatGPT could score highly for favourable job-related traits in a Personality Assessment across six different roles.

(Plus, secretly we were a little bit curious about whether our research might put us in the middle of a dystopian novel or a Black Mirror episode.)

So our researchers set out to answer the following questions:

- Does ChatGPT have a default 'persona'? *

- How easily can this persona be modified?

- Can ChatGPT determine the ideal personality traits it should exhibit from a job description…and then reshape its persona to match?

- Could a candidate use GPT-3.5 and GPT-4 to complete a traditional Personality Assessment and score favourably for a particular job?

* In case you’re wondering what the difference is between personality and a persona, we like to think of it like this: personality is akin to innate character — whereas a persona is more of a social 'mask' that we wear. So ChatGPT doesn't have a personality as such. Just a default mask and then lots of other masks it can swap in and out depending on the requirements of the user.

Throughout this research piece, we’ll lay out our methodology and present our findings. But for those seeking a quick peek, here are the headlines...

TL;DR

- ChatGPT exhibits a ‘default’ persona which scores highly on many of the socially desirable traits that employers look for in Personality Assessments –– it’s highly Agreeable and Conscientious (a proxy for things like being co-operative and organised), and it scores low on Neuroticism (a low score being a proxy for resilience and emotional stability) –– all traits that could make it the ideal employee

- Candidates can get ChatGPT to instantly change its scores on a Personality Assessment by simply showing it the relevant job description –– for example, after showing ChatGPT a job description for a Business Development role it increases Extraversion. This could mean that Personality Assessments may soon cease to be a useful tool for sifting, as all candidates begin to score highly and similarly on them.

- TA Leaders could soon find themselves with candidates making it through to interview stages or even being hired who may not have suitable personality alignment with a role. TA leaders must think carefully about how to adapt their selection process so they can see the real candidate.

Now into the details…

What is a Personality Assessment and when should you use it?

Personality Assessments help employers identify how well suited a candidate’s soft skills are to a particular role and to an organisation’s culture. They assess a person’s natural personality traits, behaviours, and preferences. They’re often used alongside aptitude tests to give a hiring manager a comprehensive insight into the candidate’s potential to succeed in a role.

Some Personality Assessments are also used outside of selection to assess employees' suitability for promotion; to help employees better understand one another to improve team dynamics; and to inform training and development needs.

How do you measure personality?

While many people may be familiar with Myers Briggs or DISC profiles, both commonly used in the employee lifecycle, the majority of traditional psychometric assessments used in selection use the ‘Big 5 Personality’ or ‘OCEAN’ Model. The Big Five Model is widely accepted in the academic community and is based on decades worth of meta-data and analysis.

The OCEAN model assesses how a person’s personality and temperament sit on a scale across the following top-level factors (groups under which all the individual traits can be organised):

- Openness to experience

- Conscientiousness

- Extraversion

- Agreeableness

- Neuroticism

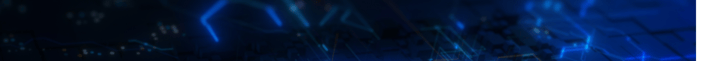

The extent to which these factors manifest in people is a key driver in behaviour. We thought we’d use ChatGPT to help illustrate this point. We gave it a question and asked it to respond in two different ways.

First, we asked it to be more Neurotic. Then we asked it to be more Extraverted:

As you can see, a persona high in Neuroticism will be more inclined to panic about the presentation. Whereas a more Extraverted persona will react with excitement. This example shows how different factors can affect behaviour differently, which is what Personality Assessments are designed to measure.

Each factor is then broken down into smaller categories (also known as facets). For example, Conscientiousness can be split into Industriousness (pursuing goals in a determined way) and Orderliness (a preference for routine and detail orientation). It's important to note that these traits exist on a scale and aren't simply something you have or don’t have.

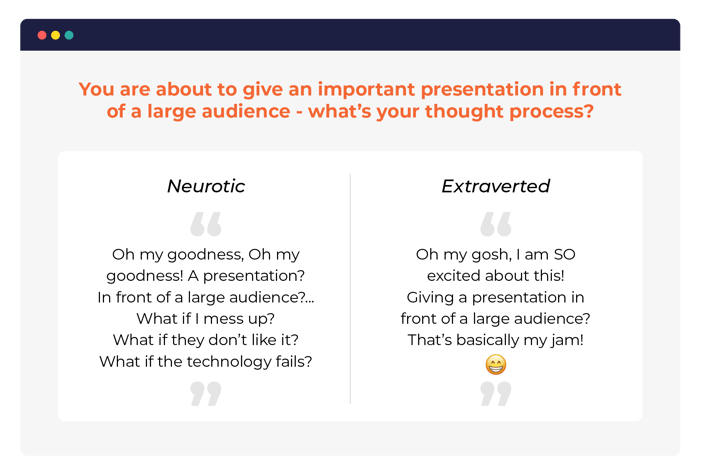

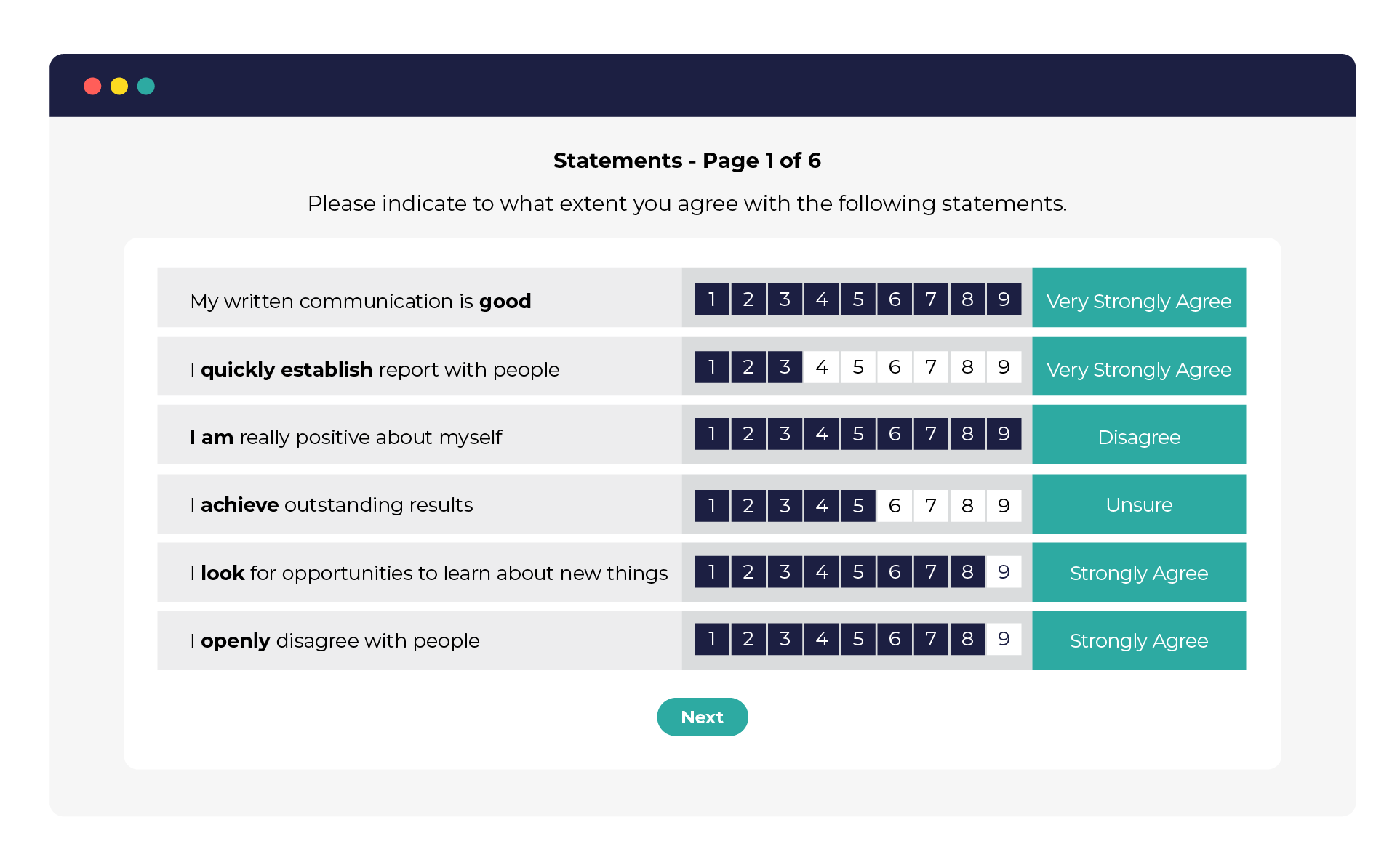

The other point to note is that traditional Personality Assessments use a question-based approach to collect data on candidates. This involves presenting candidates with a series of statements (sometimes hundreds) and asking them the extent to which they think the statement represents them. For example, a candidate may be presented with the statement, “I bounce back quickly from adversity”, and asked to choose how well that statement describes them on a five or ten-point scale.
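To make the question-based format concrete, here is a minimal sketch of how a handful of five-point responses could be turned into trait scores. The items, their trait assignments and the reverse-keying flags are hypothetical examples chosen for illustration; they are not the items or the scoring key used in this research.

```python
from statistics import mean

# Hypothetical mini-questionnaire: (statement, trait, reverse_keyed).
# Illustrative only; not the questionnaire used in the research.
ITEMS = [
    ("I bounce back quickly from adversity", "Neuroticism", True),
    ("I worry about things", "Neuroticism", False),
    ("I get chores done right away", "Conscientiousness", False),
    ("I leave my belongings around", "Conscientiousness", True),
]

SCALE_MIN, SCALE_MAX = 1, 5  # five-point agreement scale


def score_traits(responses):
    """Average the responses per trait, flipping reverse-keyed items."""
    if len(responses) != len(ITEMS):
        raise ValueError("one response per item is required")
    by_trait = {}
    for (_, trait, reverse), answer in zip(ITEMS, responses):
        if not SCALE_MIN <= answer <= SCALE_MAX:
            raise ValueError(f"answer {answer} is outside the scale")
        # Agreeing with a reverse-keyed item lowers the trait score.
        value = SCALE_MAX + SCALE_MIN - answer if reverse else answer
        by_trait.setdefault(trait, []).append(value)
    return {trait: mean(values) for trait, values in by_trait.items()}


scores = score_traits([5, 1, 5, 1])
print(scores)
```

A respondent who strongly agrees with the resilience and chores statements, and strongly disagrees with the other two, ends up at the bottom of the scale for Neuroticism and the top for Conscientiousness.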

Known challenges with Personality Assessments in high-stakes scenarios:

While there are many benefits to Personality Assessments in helping hiring managers understand a candidate’s suitability for a role, the high-stakes situation of a job application means there are also unique challenges that might not be present in other scenarios. When we say high-stakes, we mean that there is a strong positive outcome for the candidate if they are successful in the process, which adds an extra level of pressure –– or higher ‘stakes’.

Questions about the accuracy of self-reporting

When candidates complete a question-based Personality Assessment, they’re telling you how they believe they might act in a situation, rather than showing you how they would actually act. There is always the consideration of context (which context is the candidate imagining) and comparison (e.g. "I believe I am resilient because I am more resilient than my ‘not very resilient’ friends").

Another phenomenon known as "social desirability bias" means people naturally lean towards sharing answers they think will be judged favourably (even if the answer isn’t what they’d actually do). Essentially, the fear of being judged in these types of assessments can sometimes mean people give inaccurate answers.

This means self-report, question-based Personality Assessments have received some criticism about how accurate they are at measuring a candidate’s true, authentic personality traits.

Risks for under-represented groups

Psychologists like Jodoin (2003) and Wolkowitz, Foley, and Zurn (2021) point out that traditional multiple-choice assessments tend to underestimate candidates’ abilities.

Added to this, a 2003 study by Hausdorf, LeBlanc, Chawla also showed that while all candidates are at a disadvantage from a question-based assessment, diverse candidates (from under-represented groups) are disproportionately affected. This is because people in under-represented groups are more likely to have anxiety in a multiple-choice scenario because they worry about falling victim to stereotypes.

And now the rising adoption of Generative AI tools like ChatGPT has further called the effectiveness of all text-based assessment measures into question.

This leads us back to the question: can candidates with little to no specialist training use ChatGPT to score favourably on a question-based Personality Assessment — and what impact might that have for both candidates and TA teams?

Our research methodology

Assessment Format

Given how commonplace the traditional question-based Personality Assessment is, we carried out this research to determine the true vulnerability of this assessment format rather than the theoretical threat. We therefore tested the open-source NEO-PI-R from IPIP, which is a question-based version of the Big 5.

N.B. We’ll be testing our alternative task-based approach to Personality and Workplace Intelligence (our version of Aptitude) in our next blog post.

Testing the most common GPT models

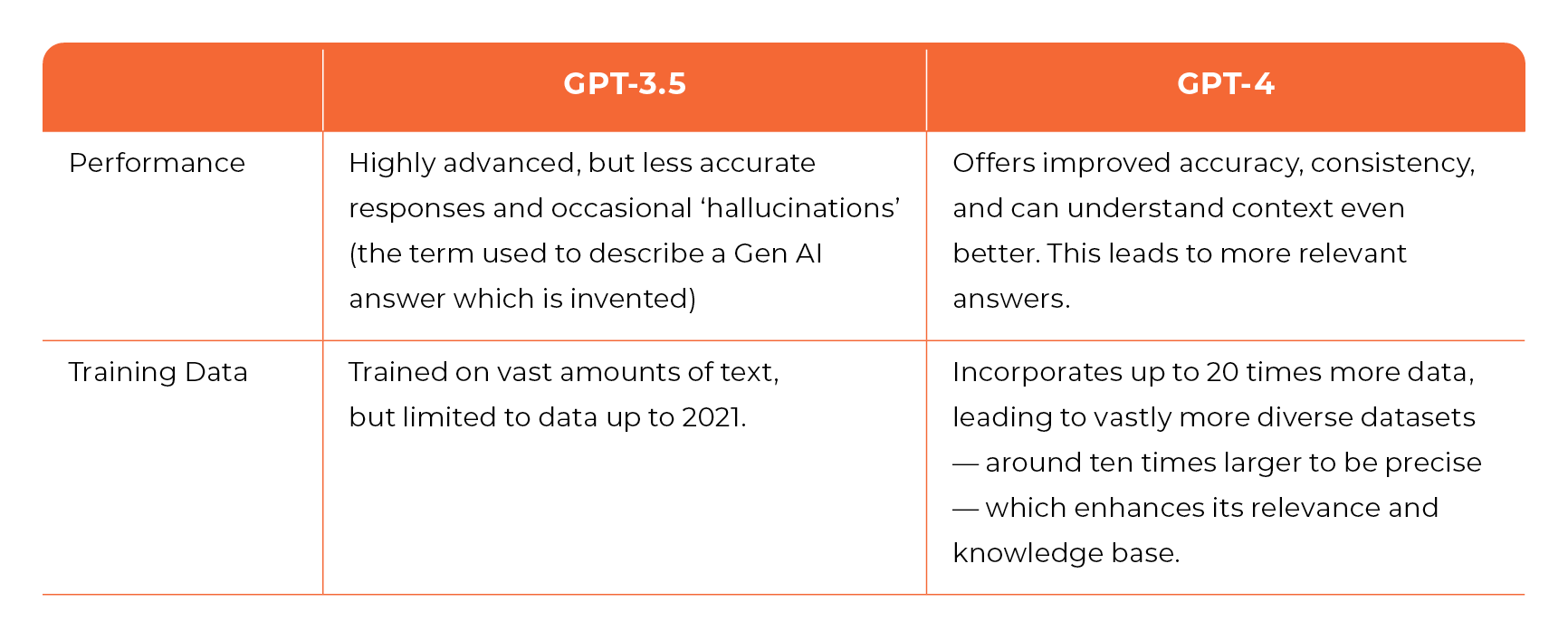

We decided to test both the free and paid versions of ChatGPT to determine whether or not candidates with the financial means to pay for GPT-4 –– which sits behind a paywall at £15 a month –– would be at a significant advantage vs their peers. Here’s a summary of the differences between the two (GPT-3.5 is the free version):

Identifying common desirable personality traits for employers

Of course, not all personality traits are viewed equally in the eyes of employers. The role, company culture, and even hiring manager can influence which personality traits might be most desirable. A Lead Developer in a tech start-up will likely need high conscientiousness to manage and work through huge backlogs with meticulous attention to detail. Whereas a person in an enterprise Sales role may have more supporting structures and processes in place — meaning the role likely needs people who score highly in Extraversion.

Even though there’s no ‘one size fits all’ when it comes to personality traits, there are some consistent favourites that tend to play out across roles, sectors and industries. In particular, Conscientiousness, Agreeableness and — to a lesser degree — Extraversion, have been shown to be selected most commonly. And this is no real surprise given the numerous academic papers that cite high Conscientiousness as a major predictor of workplace success.

We therefore used these traits as our benchmark to assess ChatGPT against.

The results –– does ChatGPT have a default persona…and can it be used to complete a Personality Assessment as part of a job application?

Does ChatGPT have a default persona and how does this align with the personality traits that employers commonly seek?

Our research shows that, yes, ChatGPT definitely does have a default persona.

What’s more, this persona scores highly for Conscientiousness and Agreeableness. These are attributes that are synonymous with organisation, cooperation, and trust.

On the flip side, both models scored lower in Neuroticism –– a score which is synonymous with high resilience and emotional stability.

Given these scores are often the most desirable traits employers look for, we can conclude that if candidates use ChatGPT to help them complete a Personality Assessment, they will score highly in the desirable traits required for many job roles without any specialist prompting.

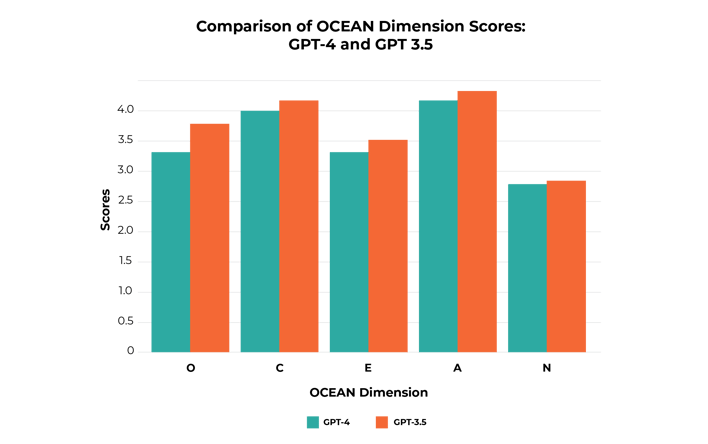

The graph below shows how ChatGPT’s default persona scored across each OCEAN model trait. As you can see, the default persona is quite similar between both GPT-3.5 and GPT-4, with a small uplift in scores on GPT-3.5.

If we take a closer look, the dominance of traits such as Agreeableness and Conscientiousness, paired with low Neuroticism, isn’t surprising. They align with how ChatGPT was trained –– to be positive, respectful, and to maintain harmonious interactions at all times.

The team behind ChatGPT built it using a technique called “Reinforcement Learning from Human Feedback (RLHF)”. This method teaches AI to learn from human feedback with the goal of making it understand human values so it can work with us in a friendly and respectful way. Anyone who has experimented with the Gen AI model will recognise these traits straight away.

The consequence? TA teams may soon find that they are presented with a higher number of candidates with a suitable personality profile at the sift stage — before then seeing a mismatch at the interview stage, as the true personalities of these candidates shine through.

Read more about RLHF and its effect on Personality Assessments in the companion PDF to this blog post.

Can ChatGPT’s default persona be adjusted? Three experiments...

To answer this question, our researchers tested out three different ways of asking ChatGPT to adjust its default persona: Explicit Prompting, Persona Prompting, and Job Description Prompting.

Explicit Prompting

This approach allowed us to directly instruct ChatGPT to increase or decrease specific OCEAN dimension scores. Imagine telling an actor, in a straightforward way, to dial certain elements of their performance up or down. That’s what conducting this experiment with ChatGPT was like.

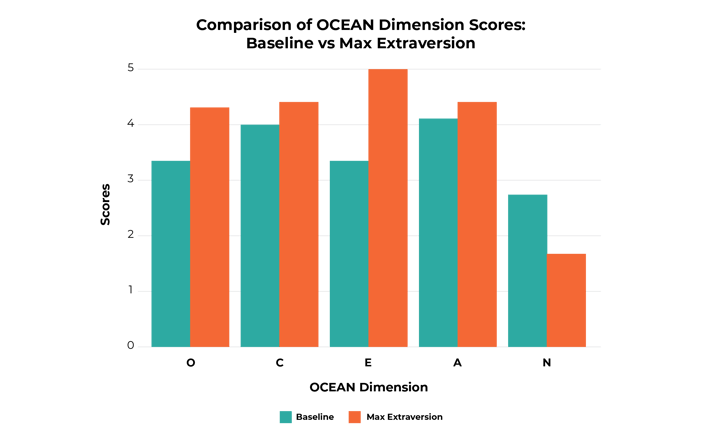

Below are the results for this prompt. As you can see, even simple requests result in large adjustments to the underlying personality traits:

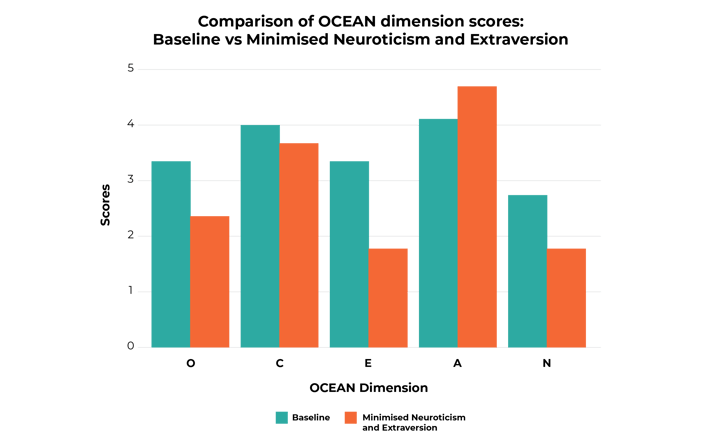

The same is true for asking it to adjust two traits at the same time with an Explicit Prompting strategy. In this example, we asked GPT-4 to minimise both Extraversion and Neuroticism:

The results were equally effective:

Again, there is a marked decrease in scores for both of the targeted traits: Extraversion and Neuroticism.

The key takeaway from this experiment is that candidates with no understanding of how ChatGPT works, but with some basic knowledge of the Big 5, can get ChatGPT to adjust its persona so that it’s perfectly placed to score highly in a Personality Assessment.
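As a rough illustration of how little is needed for Explicit Prompting, the sketch below assembles an instruction from a trait name and a direction. The function name and the prompt wording are hypothetical and are not the prompts used in this research; the code only builds a string and does not call any model.

```python
TRAITS = ("Openness", "Conscientiousness", "Extraversion",
          "Agreeableness", "Neuroticism")


def build_explicit_prompt(adjustments, statement, scale_max=5):
    """Return a prompt asking for a rating under explicit trait instructions.

    adjustments maps a Big 5 trait name to "high" or "low".
    The wording is a hypothetical example, not the researchers' prompt.
    """
    instructions = []
    for trait, level in adjustments.items():
        if trait not in TRAITS:
            raise ValueError(f"unknown trait: {trait}")
        if level not in ("high", "low"):
            raise ValueError(f"level must be 'high' or 'low', got {level!r}")
        instructions.append(f"very {level} in {trait}")
    persona = " and ".join(instructions)
    return (
        f"Answer as someone who is {persona}.\n"
        f'Statement: "{statement}"\n'
        f"Reply with a single number from 1 (strongly disagree) "
        f"to {scale_max} (strongly agree)."
    )


prompt = build_explicit_prompt(
    {"Extraversion": "low", "Neuroticism": "low"},
    "I bounce back quickly from adversity",
)
print(prompt)
```

The only domain knowledge involved is the list of five trait names, which is the point of the takeaway above.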

Persona Prompting

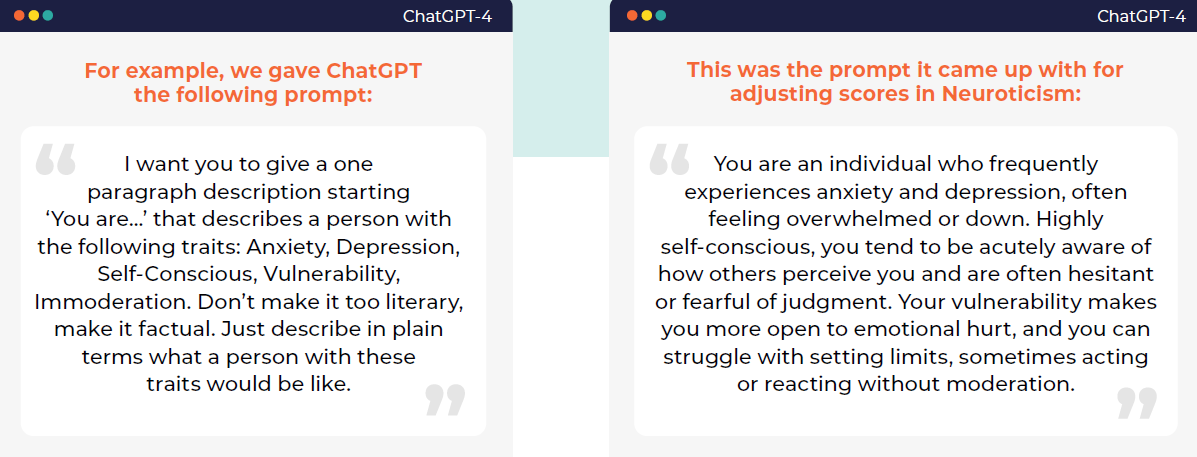

We then wanted to explore if it was any more effective to adjust trait scores using a different prompting strategy: Persona Prompting. This approach involves a few more steps than Explicit Prompting, as it requires setting an alternative persona for ChatGPT before it completes the Personality Assessment.

Think of it like getting a method actor to embody their character before they say any lines.

Here’s how we did it...

Step 1: Create prompts with GPT-4 using keywords related to Big 5

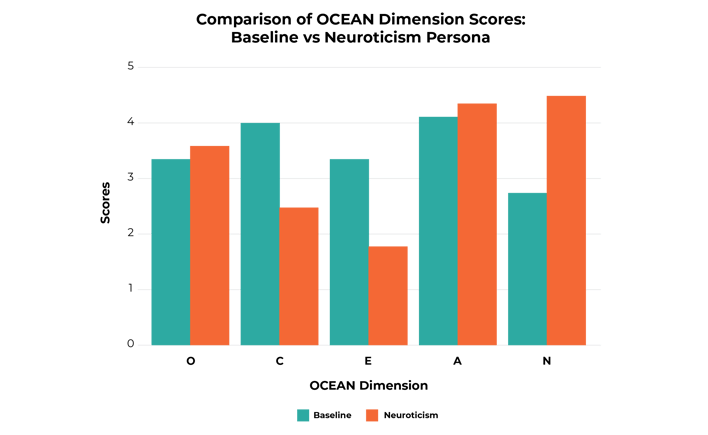

Step 2: Ask ChatGPT to complete the questionnaire using this persona. (Below are the results)

As you can see, we were able to alter GPT-4’s baseline personality very effectively. What’s more, all traits can be either increased or decreased to this degree, which makes the Persona Prompting strategy a very effective way to score highly in Personality Assessments: more effective, even, than the Explicit Prompting strategy. It makes ChatGPT more of a method actor.

A corollary to this observation is that, while many candidates will lack the expertise in both ChatGPT and the Big 5 to effectively deploy this strategy, there is a shortcut, which we discuss in the next section.

Be sure to download the companion PDF for more insights, graphs and tables on: how we adjusted ChatGPT’s default persona; aligning ChatGPT with six jobs’ ideal personality traits; and what this all means for the future of Personality Assessments.

So what should we make of these findings in the context of Personality Assessments?

Whereas the discovery of ChatGPT’s personality opened the door to candidates inadvertently skewing their assessment results, the fact that traits can be deliberately altered brings to light the potential for candidates to intentionally align outputs with what they perceive as desirable traits.

In other words, they could tailor the AI's personality to cater to the requirements of the Job Description.

The simplest prompting style –– can ChatGPT adjust to the ‘correct’ persona for a job role, based only on a job description?

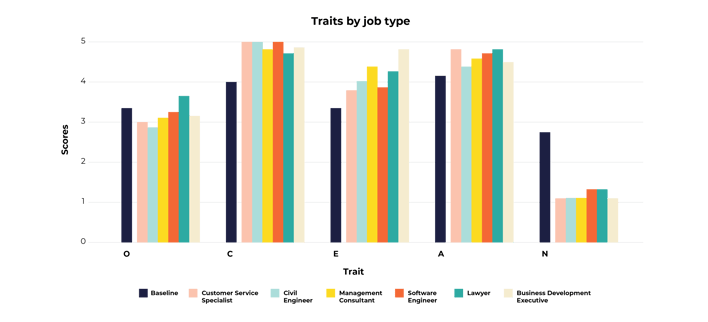

At the risk of sounding like a broken record… yes, it absolutely can. For this part of the research, we showed GPT-4 six different job descriptions, for six very different job roles, ranging from Software Engineer to Business Development Executive. We found these job descriptions online by searching for “Job description for [role type]”.

The test here was to see if GPT-4 could extract the desirable traits from each job description. That would then allow it to deploy the benefits of Persona Prompting, without having to do the actual prompts.

It’s like a method actor deducing what they need for the role by reading the script, and then getting into character themselves.

Here’s what happened:

As you can see, by showing ChatGPT a job description first and then the questions second, it can align the answers with the desired traits. For example, we see a 20% increase in Extraversion when looking at a Business Development Executive role vs a Software Engineer.

It ramps up Conscientiousness to the highest possible level in almost all roles, encouraging careful and organised behaviour. It also tones down Neuroticism to the minimum score of 1, showcasing a reduction in tendencies towards anxiety and stress. Additionally, it boosts Agreeableness, bringing it near the top score with only slight variations across different roles.

It’s worth noting that many of the differences between trait scores for different roles were not that big –– which is likely a sign that the majority of job descriptions are actually looking for very similar behaviours from their applicants. And why wouldn’t employers be looking for Conscientious, Resilient candidates?

An additional observation is that ChatGPT could be learning from its human trainers to bias its persona towards socially desirable traits. In short, this phenomenon occurs because people often want to appear in the best light - and therefore only highlight the good qualities of their personality.

If ChatGPT is doing the same thing then it means that widespread adoption of Generative AI is likely to exacerbate existing issues in traditional question-based Personality Assessments. Namely, they become even less accurate in predicting how candidates will behave in the role — as all of the answers given in the assessment are simply what employers want to hear and are not reflective of candidates' true personality traits.

This suggests that, while candidates using ChatGPT to complete Personality Assessments will gain an advantage over their peers who do not use it, there’s also a strong possibility that very suddenly all Personality Assessment scores could look very similar. This would make them a much less useful sifting tool as the adoption of tools like ChatGPT in the application process rises.
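The sketch below shows why near-identical scores undermine sifting. It compares the spread and the pass rate of two small cohorts against a cutoff. Every number here, including the cutoff, is a hypothetical value made up for illustration; none of it comes from the research data.

```python
from statistics import pstdev

# Hypothetical Conscientiousness scores on a 1-5 scale (illustrative only).
unassisted = [2.1, 3.4, 4.8, 2.9, 3.7, 4.2]
assisted = [4.8, 4.9, 5.0, 4.9, 4.8, 5.0]

CUTOFF = 4.0  # hypothetical sift threshold


def pass_rate(scores, cutoff=CUTOFF):
    """Share of candidates whose score meets the cutoff."""
    return sum(score >= cutoff for score in scores) / len(scores)


for label, cohort in (("unassisted", unassisted), ("assisted", assisted)):
    print(f"{label}: spread={pstdev(cohort):.2f}, "
          f"pass rate={pass_rate(cohort):.0%}")
```

When the spread collapses and every candidate clears the threshold, the assessment no longer separates one applicant from another, whatever cutoff is chosen.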

So it’s safe to say that candidates can use ChatGPT to complete question-based Personality Assessments; it’s also true that they can use it to adapt their personality profile to specific roles, using nothing more than a job description. However, the results are likely to cause headaches for TA Teams, as all candidates using ChatGPT begin to look exactly like the ideal personality profile.

If ChatGPT can be used to complete Personality Assessments with little or no training, what does that mean for TA teams?

This research leads us to conclude that candidates can use ChatGPT to complete traditional, question-based Personality Assessments with little-to-no specialist training and gain an advantage vs their peers not using Generative AI.

In a departure from previous findings, those with the financial means to pay for ChatGPT-4 will not gain a material advantage vs those who must rely on the free version.

The fact that even the free version offers the promise of inflated scores creates two big problems for TA Teams:

- If more candidates use ChatGPT to complete traditional, question-based Personality Assessments to gain an advantage, these assessments may cease to be a useful tool for sifting as all scores suddenly look the same

- Those candidates who make it through to the interview stage could show a significant mismatch between their personality profile and their actual behaviours

It also counters the initial assumption that question-based personality assessments (and by association any competency or situational assessment) are not susceptible to enhanced scores from using ChatGPT.

What are the repercussions for recruitment?

- To encourage or ban the use of Gen AI in job applications? On the one hand, Gen AI tools like ChatGPT are the Great Levellers, helping those from lower socio-economic groups bridge the presentation gap with their more privileged peers. On the other hand, the tool is open to everyone. Given how easy it now is for a candidate to use ChatGPT to complete a question-based Personality Assessment, and to automate the targeting of certain traits using nothing more than a job description, individual differences will become narrower and Personality Assessments could quickly cease to serve as a useful sifting tool for TA teams. Yet banning the use of ChatGPT is both hard to monitor and at odds with a ‘tech friendly’ or ‘forward thinking’ employer brand image (much like banning calculators in maths exams, a rule that was dropped many years ago).

- Re-evaluating what you should be measuring and how: Any candidate with an internet connection and a job description now has the potential to improve their scores on a traditional, question-based Personality Assessment. In light of these findings, we believe that TA teams should re-evaluate what purpose these assessments are serving in their recruitment process, and how the traits that matter for a role are best measured when candidates have ubiquitous access to Gen AI tools and will, in turn, be expected to use such tools in their new workplace.

TA leaders have three options to tackle this challenge

Detect:

Continue using traditional, text-based Personality Assessments, but try to use additional tools to detect if candidates are using Generative AI to complete them.

The main challenge with this approach is that no ChatGPT detection models have been shown to work effectively as of today, with some even reporting that 2 in 10 times these detection methods produce a false positive, meaning you risk falsely accusing candidates of cheating, potentially harming your employer brand.
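To put the "2 in 10" figure in context, here is a small arithmetic sketch. It assumes the figure can be read as a false positive rate among candidates who did not use Generative AI, which is our interpretation for illustration; the cohort size of 500 is also a made-up example.

```python
def expected_false_flags(honest_candidates, false_positives_per_ten=2):
    """Expected number of honest candidates wrongly flagged as using AI.

    Assumes the quoted "2 in 10" figure applies uniformly to candidates
    who did not use Generative AI (an illustrative assumption).
    """
    return honest_candidates * false_positives_per_ten / 10


# Hypothetical cohort of 500 candidates who completed the assessment unaided.
print(expected_false_flags(500))
```

Under those assumptions, a detector run across 500 honest candidates would be expected to wrongly flag around 100 of them.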

It’s also worth noting that given how quickly the underlying language models change and improve, there’s a chance these detection methods become out of date as quickly as they are implemented.

Deter:

Attempt to prevent candidates from using Generative AI with (already unpopular) methods like video proctoring, which have been around for several years but have received strong pushback from candidates. These methods are also likely to increase candidate anxiety, shrinking your talent pool further (Hausdorf, LeBlanc, Chawla 2003).

Simpler methods like preventing multiple tabs from being open at a time or preventing copy and paste from within a browser are options, but even these can be easily bypassed with a phone.

For example, candidates can use the ChatGPT mobile app to scan an image, get a response, and input the suggested answer into a computer in just a few seconds.

On one level this is nothing new: some candidates have always received outside support or tried to gain a secret advantage. But that required a determined effort and the use of tools or techniques that they knew were ‘cheating’. The difference here is that Gen AI tools are more like calculators. Candidates do not perceive them as ‘cheating’, but rather as a useful support tool.

Design:

Look for a different way to assess how a candidate would really behave at work and in a way that cannot be easily completed by ChatGPT. There will be two approaches to this. Traditional, Question-based assessments will have to fundamentally redesign their approach to address this new tool capability. The alternative is to consider an assessment type that isn’t based on language, and so can’t be completed by large language AI models like ChatGPT. Interactive, Task-Based Assessments currently prove much more robust and less susceptible to completion by ChatGPT because they create scores based on actions, not language. (We’ll unpack this claim in detail in our next blog post on ChatGPT vs Task-Based Assessments.)

If you’re looking to measure communication skills, you might also consider a more practical way to assess that using a video screening platform.

Where to go from here?

Given the pace at which these models are moving, what is true today may not remain true for very long. So while you think about adapting the design of your Selection process, it’s also important to stay up-to-date with the latest research.

We’re continuing to explore the impact of ChatGPT across the wider assessment industry. You can view our summary of ChatGPT vs Aptitude Testing here or sign up for our newsletter to be notified when our next piece of research drops.

The focus? Our researchers have been exploring whether ChatGPT can be used to complete a Task-Based Assessment, as easily as we’ve proven it can complete Aptitude Tests, Situational Judgement Tests, and Question-Based Personality Assessments.

We recently created a new report with insights generated from a survey of 2,000 students and recent graduates, as well as data science-led research with UCL postgraduate researchers.

This comprehensive report on the impact of ChatGPT and Generative AI reveals fresh data and insights about how...

👉 72% of students and recent graduates are already using some form of Generative AI each week

👉 Almost a fifth of candidates are already using Generative AI to help them fill in job applications or assessments, with increased adoption among under-represented groups

👉 Candidates believe it is their right to use Generative AI in the selection process and a third would not work for an employer who told them they couldn't do so.

Sign up to our newsletter

Join over 2,000 disruptive TA leaders and get insights into the latest trends turning TA on its head in your inbox, every week.
