Navigating candidate use of GenAI: should you Deter, Detect, or Design selection differently?

Thursday 14th December


The research is clear: in the next twelve months, 7 in 10 students and recent graduates plan to use Generative AI tools to complete a job application or assessment. And almost a fifth of candidates already admit to doing it.

Widespread use of Generative AI is set to make all CVs and text-based application forms look the same. Tools like AutoApplyAI already promise to submit hundreds of tailored CVs and cover letters at the click of a button, meaning that for many talent acquisition (TA) teams, CVs are quickly ceasing to be a useful or scalable sifting tool.

The obvious alternative for many talent acquisition teams would be to use a psychometric assessment to measure candidates’ true personalities and abilities. But many of these are also vulnerable to Generative AI tools.

For example, ChatGPT outperforms 98.8% of human candidates on question-based Verbal Reasoning tests and scores around the average sifting threshold on a traditional Situational Judgement Test. So sifting candidates based on scores from these types of traditional psychometric tools may not be reliable either.

These vulnerabilities are already causing problems. Some TA teams are reporting a huge increase in application volumes and assessment pass rates, followed by a drop in candidate quality at the later interview or assessment centre stages, when candidates' true personalities and abilities are revealed.

Every organisation now faces the same sifting conundrum: we want candidates to embrace Generative AI – but in many cases, this undermines the accuracy and scalability of our current recruitment methods.

TA leaders and their teams now have three options to address this issue:

- Deter the use of GenAI

- Detect its use

- Design a new selection process that embraces GenAI while still assessing candidates accurately.

We explain the three options and their effectiveness below.

1. Deter

Deterring GenAI use means, unsurprisingly, making candidates aware that they’ll be penalised if they use Generative AI at the application or selection stages.

For example, career sites have always highlighted that if an applicant is caught ‘cheating’, they’ll be removed from the selection process. Some employers are now extending the definition of ‘cheating’ to include using GenAI tools.

The difficulty with this approach is that the research shows only 13% of candidates feel that using GenAI tools in an application or assessment is dishonest. To the vast majority of students, using GenAI is no more ‘cheating’ than using a calculator instead of relying on mental arithmetic. In fact, a third of candidates wouldn't apply for a role if they weren't permitted to use GenAI, another third would be put off, and another third would view the employer as unprogressive.

Classifying candidates using GenAI as ‘cheats’, when those same candidates do not see themselves as acting dishonestly, presents a big risk. Employers may very quickly look out of touch, damaging their brand and their ability to attract the best talent.

Other ‘hard’ deterrence techniques include preventing copying and pasting or having multiple applications open at the same time. But these are easily bypassed. (See this article and attached PDF for an example of how candidates can use the iPhone’s image-to-text feature to bypass deterrence techniques that prevent them from having multiple tabs open during an assessment.)

In contrast, some businesses are taking a softer, more transparent approach to deterring candidates – particularly when it comes to psychometric assessments.

These businesses are signposting to candidates that using Generative AI will obscure the ‘real them’ and may also give candidates a less accurate picture of whether the role is a good fit for them.

Others are choosing to coach candidates on where in the selection process GenAI will add value to their application… and where it won’t.

In all of the above examples, the hope is that candidates will be more willing to participate in the assessment without assistance from GenAI. In the highly competitive and high-stakes environment of job applications, however, this approach alone is not guaranteed to have the desired impact.

2. Detect

Detecting candidates’ use of Generative AI means using monitoring algorithms to ‘flag’ suspect responses. The main issue with this approach is that, as of today, no ChatGPT detection model has been shown to work effectively.

Some sources even report that these detection methods produce a false positive 2 times in 10, meaning you risk falsely accusing one in five candidates who didn’t use GenAI of cheating. This could harm your employer brand, not to mention set back diversity efforts, given that Black and neurodiverse candidates are more likely than other groups to use GenAI to help with their CVs.
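A rough worked example shows why a 2-in-10 false-positive rate matters. The sketch below is a minimal Python illustration; the pool of 1,000 applicants, the 20% share who genuinely used GenAI and the detector’s 80% hit rate are hypothetical assumptions chosen for illustration, not findings from our research. Only the 20% false-positive rate reflects the figure reported above.

```python
def expected_flags(pool_size: int, genai_share: float,
                   false_positive_rate: float, true_positive_rate: float) -> dict:
    """Expected outcomes when every applicant is run through a GenAI detector.

    All inputs are illustrative assumptions, not measured values.
    """
    users = pool_size * genai_share          # applicants who actually used GenAI
    non_users = pool_size - users            # applicants who did not
    wrongly_flagged = non_users * false_positive_rate
    correctly_flagged = users * true_positive_rate
    total_flagged = wrongly_flagged + correctly_flagged
    return {
        "wrongly_flagged": wrongly_flagged,
        "correctly_flagged": correctly_flagged,
        # Share of flagged applicants who did nothing wrong (0.0 if nobody is flagged)
        "share_of_flags_that_are_false": (
            wrongly_flagged / total_flagged if total_flagged else 0.0
        ),
    }


# Hypothetical pool: 1,000 applicants, 20% used GenAI,
# the detector catches 80% of users and wrongly flags 20% of non-users.
result = expected_flags(1000, 0.20, 0.20, 0.80)
print(round(result["wrongly_flagged"]))                   # 160
print(round(result["correctly_flagged"]))                 # 160
print(round(result["share_of_flags_that_are_false"], 2))  # 0.5
```

Under these assumed numbers, half of all flagged applicants would be people who did nothing wrong. The exact share moves with the inputs, but as long as the false-positive rate is above zero, some of the accusations land on honest candidates.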

Another detection method is online video proctoring, which has been around for some time. This involves live-streaming or recording a test-taker’s entire assessment session via a webcam.

It’s then up to either a test administrator or an AI system to review the footage closely for suspicious activity during the online test or assessment.

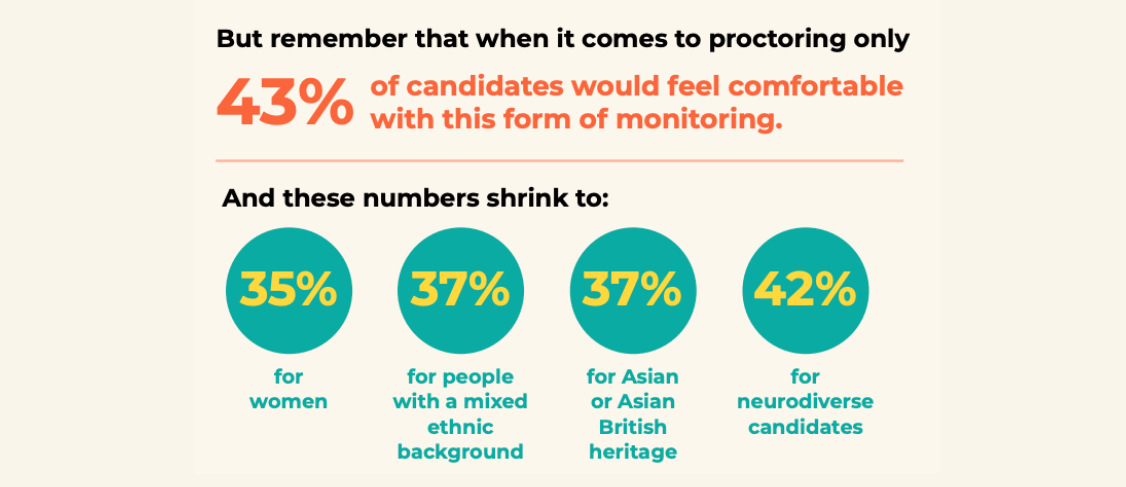

However, our recent survey of 2,000 students and recent graduates showed that Early Careers candidates are not comfortable with this type of detection:

Proctoring runs the very real risk of disproportionately affecting some groups of candidates. If only 35% of the women in your candidate pool are comfortable with this method, it could undo years of progress toward your diversity goals.

Five reasons why Detecting GenAI may not be effective

- No ChatGPT detection model has been shown to work effectively as of today. Some sources even report that these detection methods produce a false positive 2 times in 10, meaning you risk falsely accusing one in five candidates who didn’t use GenAI of cheating, potentially harming your employer brand.

- Detection models will date quickly. Because the underlying language models change and improve so fast, there’s a high chance these detection methods will become out of date.

- Prevention tools are easily circumvented. Candidates can easily use an iPhone or Android phone to scan text, feed it into the ChatGPT app, and input the suggested answer into a computer in just a few seconds. See our research on ChatGPT vs Situational Judgement Tests for examples and screenshots.

- Flagging candidates as suspicious could harm diversity. What will you do if a candidate is flagged as suspicious? If 7 in 10 candidates are willing to use ChatGPT in their application, excluding them risks reducing diversity and reach (for example, 65% of women are uncomfortable with proctoring).

- Poor candidate experience. We have to consider the impact of harsher detection measures on the candidate experience. Existing research shows that these methods (especially online proctoring) are likely to increase candidate anxiety, blur your view of candidates’ real abilities, and further reduce the diversity of your talent pool (Hausdorf, LeBlanc, Chawla 2003).

3. Design

If deterring and detecting Generative AI usage still leaves you vulnerable to inflated scores from ChatGPT, and also risks damaging your employer brand, then the only other option is to use different selection tools – ones that are designed to be more resistant to inflated scores from GenAI.

This approach is now the only way to:

- Accurately assess candidates’ potential to succeed in the role

- Continue progress towards diversity goals

- Protect your employer brand

When your selection tools are designed effectively, your selection process can celebrate rather than penalise GenAI usage.

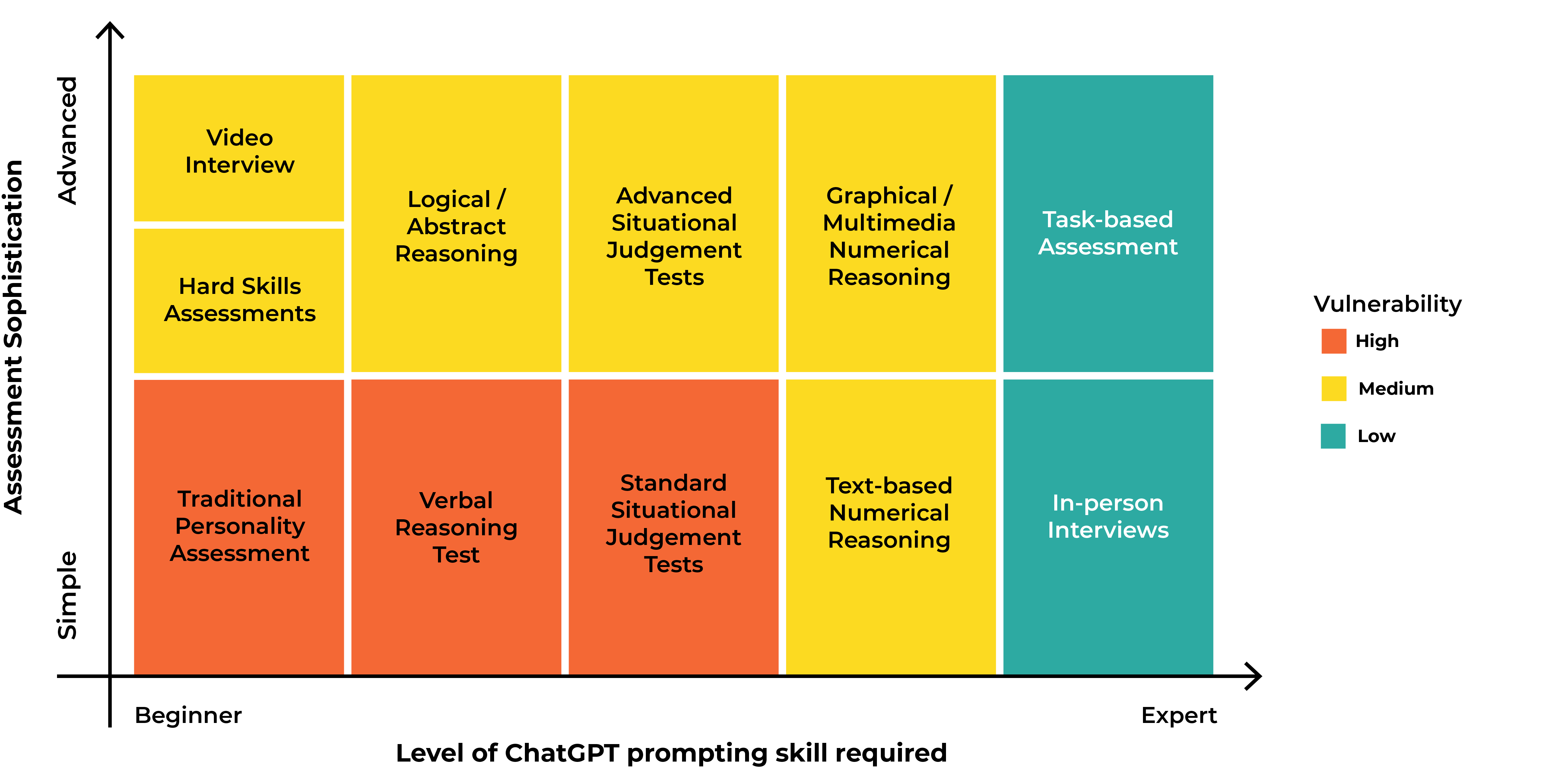

In particular, our research has revealed that TA teams should review the vulnerability of their traditional question-based assessments and either amend the design to address its weaknesses (where possible) or consider a more modern, Task-based assessment design.

The matrix below maps the vulnerability of common assessment types to ChatGPT along two dimensions: the sophistication of the assessment method, and the level of ChatGPT prompting skill required. Most traditional text-based assessment types are highly vulnerable to Generative AI, while in-person assessments and Task-based Assessments have low vulnerability.

Why are some assessment designs less vulnerable to ChatGPT?

The Task-based Assessment is designed with three components that make it harder for candidates to inflate their scores using ChatGPT.

TA teams can either switch to a Task-based Assessment or consider looking for these elements in other assessment designs:

- Make them non-verbal: Instead of relying on language-based questions, a Task-based design is built on visual interactions that bypass ChatGPT’s linguistic prowess. We’ve tested automated ways of explaining tasks to ChatGPT, but none of them worked. Interactive, visual tasks provide a more robust defence against Generative AI.

- Don't score candidates on the final answer: Most traditional assessments score candidates solely on whether they choose the ‘preferred’ answer, and this all-or-nothing scoring leaves them vulnerable to Generative AI tools. The best way around this problem is to score every step the candidate takes, which gives you a true picture of how they think and act, not just a picture of how well they work with ChatGPT to answer the question. Task-based Assessments use interactive, dynamic item types that require sustained engagement from the candidate, which makes them well suited to scoring every step.

- Use refreshed and novel items: Generative AI tools rely on the candidate explaining the task through a chatbot-style prompt. Traditional assessments have just one format and style, whereas Task-based Assessments have multiple styles and, within each style, a fast-changing set of requirements. Crafting new prompts for every item would be too time-intensive, especially within the time limit.
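To make the contrast between final-answer scoring and step-by-step scoring concrete, here is a minimal, hypothetical Python sketch. The `Step` record, the 0-to-1 `quality` values and the simple averaging rule are illustrative assumptions only; this is not how any particular Task-based Assessment is actually scored.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One recorded action in an interactive task (hypothetical structure)."""
    action: str
    quality: float  # 0.0 to 1.0, how good this action was against an assumed rubric


def final_answer_score(final_answer: str, preferred: str) -> float:
    # Traditional approach: all-or-nothing on the last answer only.
    return 1.0 if final_answer == preferred else 0.0


def step_level_score(steps: list[Step]) -> float:
    # Illustrative rule: average the quality of every recorded step, so the
    # score reflects how the candidate worked, not just where they ended up.
    if not steps:
        return 0.0
    return sum(s.quality for s in steps) / len(steps)


# Two hypothetical candidates who both reach the preferred answer "X".
methodical = [Step("sort items", 0.9), Step("group by colour", 0.8), Step("submit", 1.0)]
stumbling = [Step("random move", 0.2), Step("undo", 0.3), Step("submit", 1.0)]

print(final_answer_score("X", "X"), final_answer_score("X", "X"))  # 1.0 1.0
print(round(step_level_score(methodical), 2), round(step_level_score(stumbling), 2))  # 0.9 0.5
```

Final-answer scoring cannot tell these two candidates apart; scoring every step separates the one who worked methodically from the one who stumbled onto the answer.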

This design lets organisations accurately differentiate between candidates and, in doing so, lets candidates show their true abilities independently of GenAI tools.

After all, the main draw of the ‘Design’ solution is that it allows a more nuanced understanding of candidates’ true selves, tapping into areas untouched by AI assistance and so presenting a truer picture of what they’re really capable of.

The bottom line is that forward-thinking TA teams who embrace and embed a Design approach now have an opportunity not only to survive in the age of Generative AI, but to thrive.

How to get started – steps TA Professionals should take to redesign their selection tools

The impact of Generative AI and tools like ChatGPT is going to be huge. Ignoring this impact is not an option.

Our research shows how broadly these tools are being used and how, with little or no training, any user can ‘ace’ traditional assessments, especially language- and reasoning-based ones. We don’t pretend to have all of the answers.

But based on the insights and observations above, there are some immediate actions that talent acquisition professionals must consider when thinking about whether to Deter, Detect, or Design their selection tools differently in response to candidates’ use of Generative AI.

Step 1

Conduct a Generative AI Vulnerability Audit.

Some TA teams are already seeing pass rates jump and candidate quality become more variable, suggesting that top-of-the-funnel sifting is currently most at risk of distortion. In the words of Yasar Ahmad on the TA Disruptors podcast, we need to act now to review our process to ensure that we’re climbing a hill, not a mountain.

That’s why we’ve developed a methodology that all TA teams can use to audit their existing vulnerabilities to Generative AI. The audit methodology can be adapted to suit your team, while ensuring your decisions build on data-driven insights.

The methodology covers four areas of enquiry:

- Analysis

- Optional deep dive

- Mitigation strategies

- Continuous monitoring and improvement

Download our Generative AI Audit Template and discover which mitigation strategy is right for your team

Step 2

Create a set of internal recommendations that outline how you plan to redesign the selection process to embrace Generative AI, rather than criminalise it.

Once you’ve completed Step 1, you’ll know which areas you need to address, both immediately and in the long term. Depending on the results of your audit, one obvious change could be moving your assessment design from question-based to Task-based.

You might decide to change the wording on your career site to make your position on the use of Generative AI explicit — whether welcoming it or cautiously accepting its use. Law professor Tammy Pettinato Oltz offers good advice on this.

Doing nothing is a risky choice

One thing is absolutely clear for Early Careers teams. Doing nothing and ignoring the impact of GenAI on the recruitment process is a risky choice. Over the coming months, we can expect more and more vulnerabilities to come to light.

Our recent report and its underlying research are designed to help inform what you can do and why it’s so important to do that now.

Likewise, our GenAI Vulnerability Audit gives TA Teams a methodology with which they can assess the risk inherent in their current processes — and start to decide which mitigation strategy is best for them.

Disruptive TA teams must act now to get ahead.

No matter where you now stand in your thinking, you can join us on this journey by signing up for our TA Disruptors Newsletter, where we’ll be sharing more insights and progress on the impact of Generative AI each week.

Sign up for our newsletter

Join over 2,000 disruptive TA leaders and get insights into the latest trends turning TA on its head in your inbox, every week
