Psychometric tests

It's not easy to stay on top of everything in psychometric testing, which is why we've broken it down so that it's easy to understand!

What is a Psychometric Test?

It’s all in the name: “psycho”, from the Greek for mind, and “metric”, meaning measurement.

Psychometric tests, in all their forms, are designed to measure certain aspects of how a candidate’s brain functions and responds to various scenarios and stimuli. Depending on the type of test, they can grant employers rich data on an individual’s cognitive ability (or, intelligence), personality, behaviour and motivations. Ultimately, this data should make it easier for employers to establish how well candidates fit the requirements of a given role.

Psychometric testing gives employers a way to learn a bit more about their candidates, on top of the more traditional steps in the hiring process, like CVs or interviews. Tests come in all sorts of shapes and sizes, and can measure all sorts of different things.

How do Psychometric Tests Work?

Naturally, this depends on the test (and who provides it!). But, for most psychometric tests, there are five steps you’ll go through:

Onboarding phase

Before you get started with a psychometric assessment, you’ll need to decide what exactly you want to look for in your candidates. What does “good” look like in a given role, or your unique culture? To get there, you’ll often go through an onboarding process.

(Unless you use our Assessment platform, that is, where you can tell our assessment what to look for yourself.)

Typically, though, onboarding processes for psychometric testing involve workshops, interviews with high performers, desk research and job analysis (often by trained business psychologists). Honestly, this can take a little while (depending on the level of detail, and your patience).

Profile creation

The good thing about a long, bespoke onboarding process is that the output is always bespoke to you. When we say “output”, we generally mean a profile or framework, which details the traits that emerged from your onboarding as being vital for your role or culture. This is the profile that you’ll assess all candidates against.

Candidate invitation

But, before you can assess them, you need to invite them! If you’ve integrated your psychometric assessment provider with your applicant tracking system (ATS), you can generally do this in just a few clicks.

Candidate completion

Whether you’re using a more traditional questionnaire-style psychometric test, or something more engaging (like our behaviour-based assessment), your candidates need to complete it so you can see all that insight on the other side.

Reporting

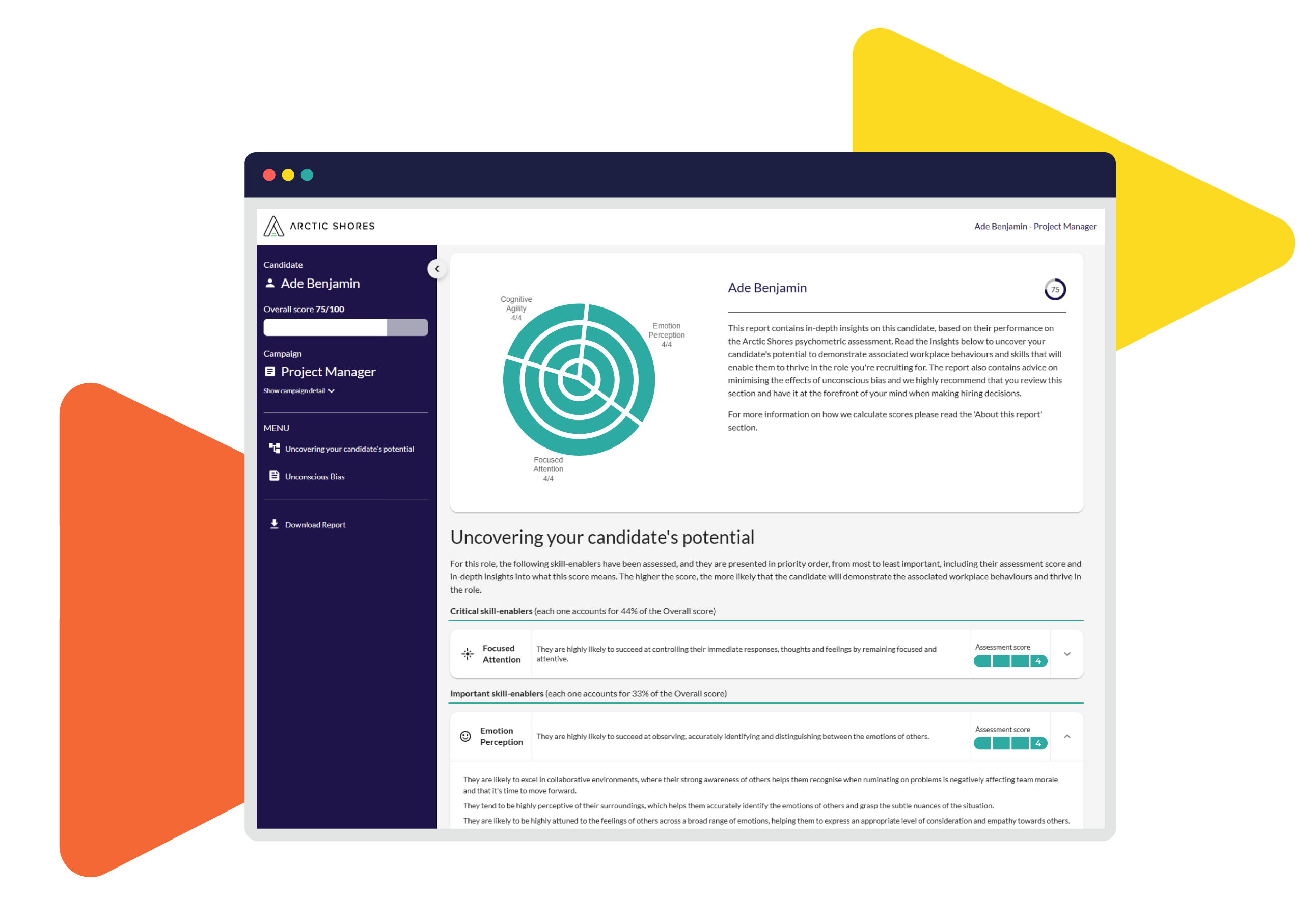

Once your candidates have completed their psychometric assessment, you’ll receive some reporting on how they stack up against that initial profile of what ‘good’ looks like for you. We can’t speak for other assessment providers, but once a candidate completes our Task-based Assessment, we automatically send out two reports: one to you (the manager report) and one to the candidate (the feedback report).

Manager report

This shows, trait-by-trait, how your candidates measured up to your profile. This helps you:

- Understand their strengths and development areas

- Make smarter screening decisions

- Prepare for an interview (we actually make this even easier, by giving you bespoke interview guides, too)

- Support onboarding (knowing your candidates’ strengths helps to speed up their time to value)
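To make the trait-by-trait comparison a little more concrete, here is a minimal Python sketch of checking one candidate's scores against a role profile. It assumes each trait in the profile is expressed as a target band on a hypothetical 1 to 10 scale; the trait names, the bands and the `compare_to_profile` function are all illustrative assumptions, not how Arctic Shores (or any other provider) actually scores or reports candidates.

```python
# Hypothetical role profile: a target score band (low, high) per trait on a
# 1-10 scale. Trait names and bands are illustrative assumptions only.
PROFILE = {
    "resilience": (6, 9),
    "curiosity": (5, 10),
    "attention_to_detail": (7, 10),
}


def compare_to_profile(candidate_scores, profile):
    """Return {trait: (score, status)} for every trait in the profile.

    status is 'development area' below the band, 'above profile' above it,
    and 'meets profile' inside it (band edges count as inside).
    """
    report = {}
    for trait, (low, high) in profile.items():
        score = candidate_scores[trait]
        if score < low:
            status = "development area"
        elif score > high:
            status = "above profile"
        else:
            status = "meets profile"
        report[trait] = (score, status)
    return report


if __name__ == "__main__":
    # Hypothetical candidate scores for the same three traits.
    candidate = {"resilience": 8, "curiosity": 4, "attention_to_detail": 7}
    for trait, (score, status) in compare_to_profile(candidate, PROFILE).items():
        print(f"{trait}: {score} -> {status}")
```

Here curiosity falls below its band and is flagged as a development area, the kind of point a manager might then probe at interview. A real report would rest on validated scoring rather than fixed cut-offs like these.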

Candidate report

With your permission, we automatically send every candidate a feedback report, to recognise the time and effort they’ve put into completing our assessment. We’ve found this helps create a rewarding experience, even if they’re eventually unsuccessful.

In their feedback reports, candidates see an overview of the traits they displayed in our assessment, with less granular detail than the manager report. This gives candidates an opportunity to learn a bit more about themselves, without overwhelming them with unnecessary detail.

“So much of success is based on ambition, learning agility, culture fit, and alignment with purpose”

Types of psychometric test for recruitment

The field of psychometrics is pretty diverse, and different assessments will measure different things. Broadly, though, you can split the field into two camps: aptitude, and personality.

Aptitude tests

All aptitude tests, in their various forms, measure some aspect of ability or cognition (what we’d usually think of as intelligence). They’ll often ask questions where the answer is either right or wrong, measuring what’s known as ‘maximum performance’. As a result, there’s not a lot of grey area with aptitude tests.

Here are some common types of aptitude test you’ll find on the market:

- Numerical reasoning: These look at how quickly and accurately someone can process numerical data. Common tasks include interpreting charts, solving numerical sequences, working with percentages or ratios, and converting currencies.

- Logical reasoning: Logical reasoning, aka critical or inductive reasoning, can involve text, numbers, or even just shapes. These tests measure how candidates solve problems, make connections and spot rules or patterns. For example, a candidate might be asked to read a passage of text and then choose the statement that most accurately reflects it.

- Spatial reasoning: Spatial reasoning tests assess how well someone can visualise, differentiate and manipulate 2D and 3D shapes in their mind. They can also include more specific tasks, like map-reading and route-planning. These tests are especially common in STEM fields.

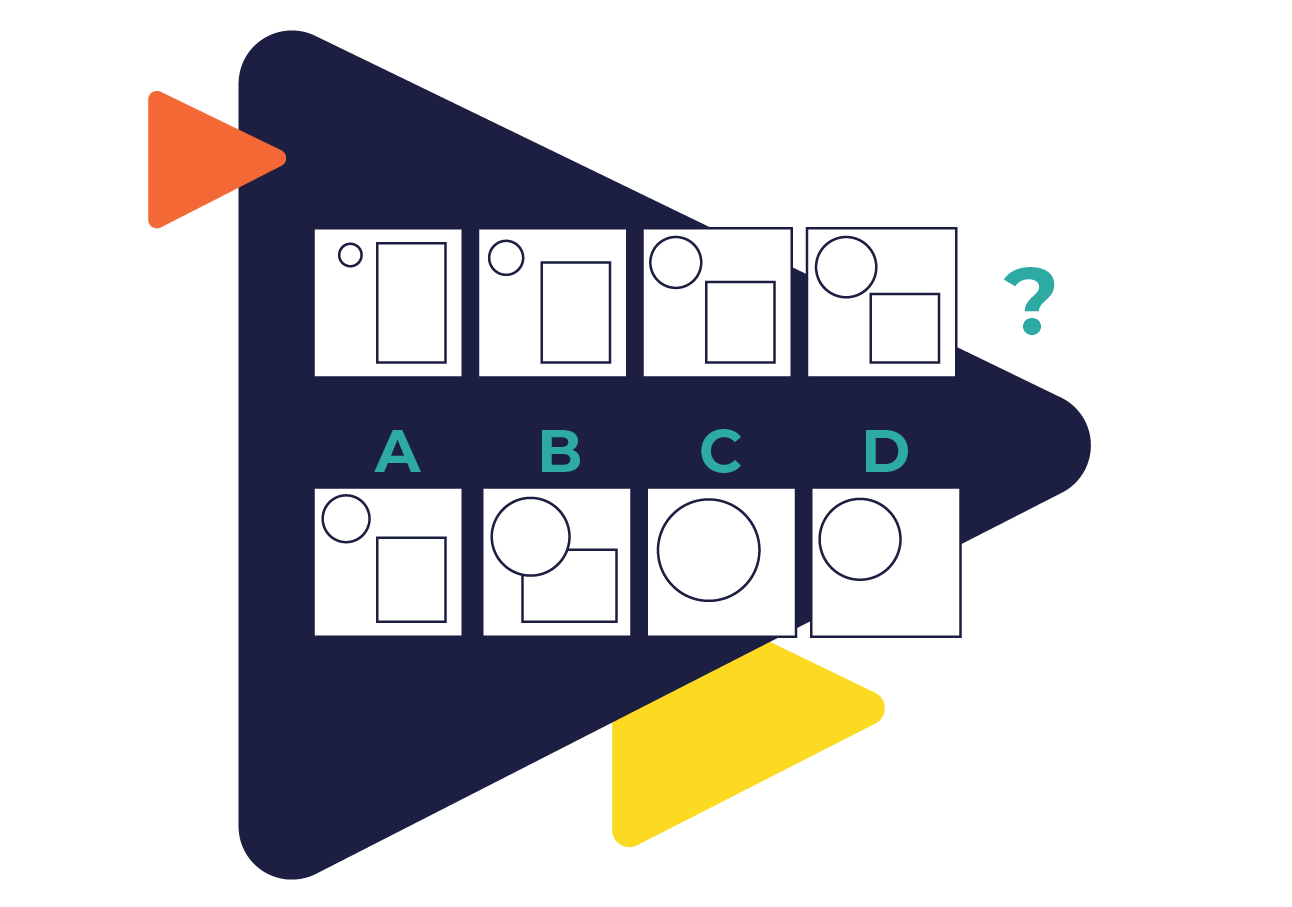

- Abstract reasoning: Like logical reasoning, abstract reasoning measures pattern-recognition. The main difference is that abstract reasoning requires no use of language or mathematics. Commonly, a candidate will be presented with a sequence of shapes or patterns, and will need to predict what comes next.

- Error checking: No prizes for guessing this one: error checking tests measure how well a candidate spots errors. That could be a mistake in a data set, a typo in a block of text, or a logical error in a hypothetical problem.

- Technical tests: A technical test will relate to a specific field, usually in STEM. It may draw on other psychometric tests, such as spatial reasoning, numerical reasoning and logical reasoning, and apply this to specific problems found in that field.

Personality assessments

Unlike aptitude tests, personality assessments don’t focus on ‘right or wrong’. Instead, they aim to give employers a clearer read on candidates’ natural behaviours, and how these will affect their fit, whether with a specific role or with the wider company culture.

Because there’s no real ‘right or wrong’ when we consider someone’s personality, these tend to be known as ‘assessments’ rather than ‘tests’. We can break them down into two types:

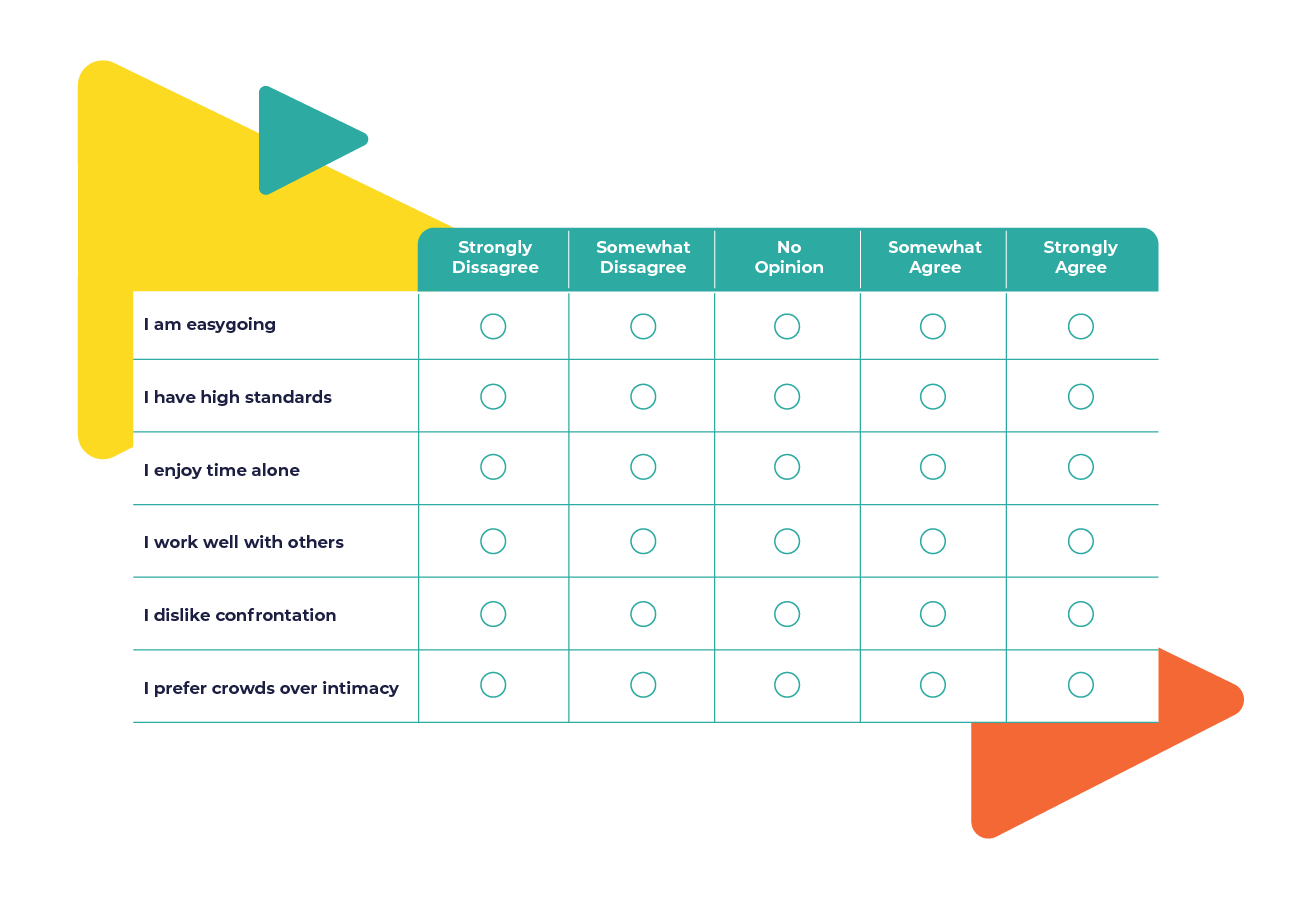

- Self-report: These ask candidates to describe their own personality. They’ll often present a statement, and then ask the candidate the extent to which they agree. For example: “I’m a naturally creative person”, or “I enjoy working closely with others”. These are effectively questionnaires, and candidates will normally complete them online today (though they existed for decades in paper format beforehand).

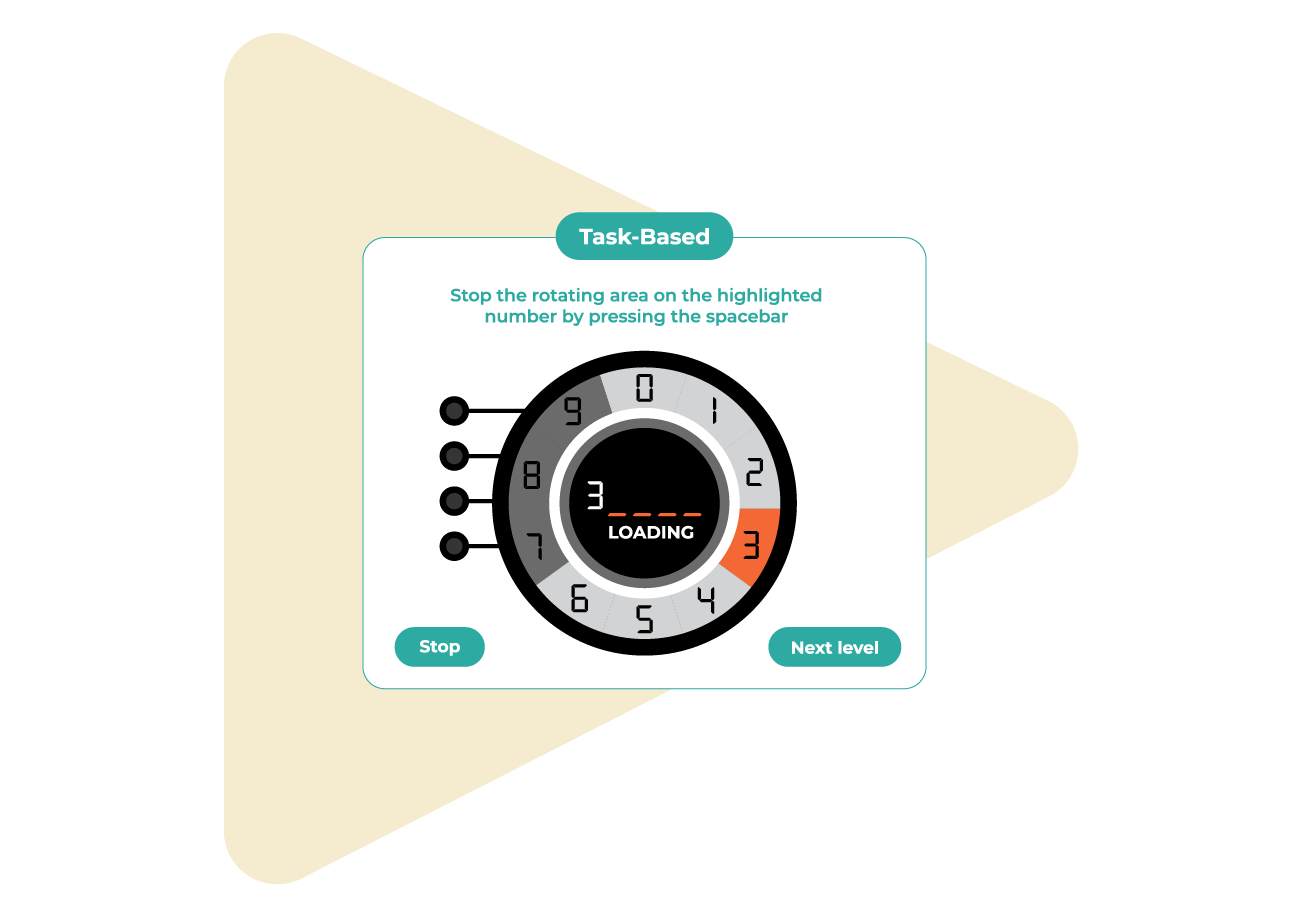

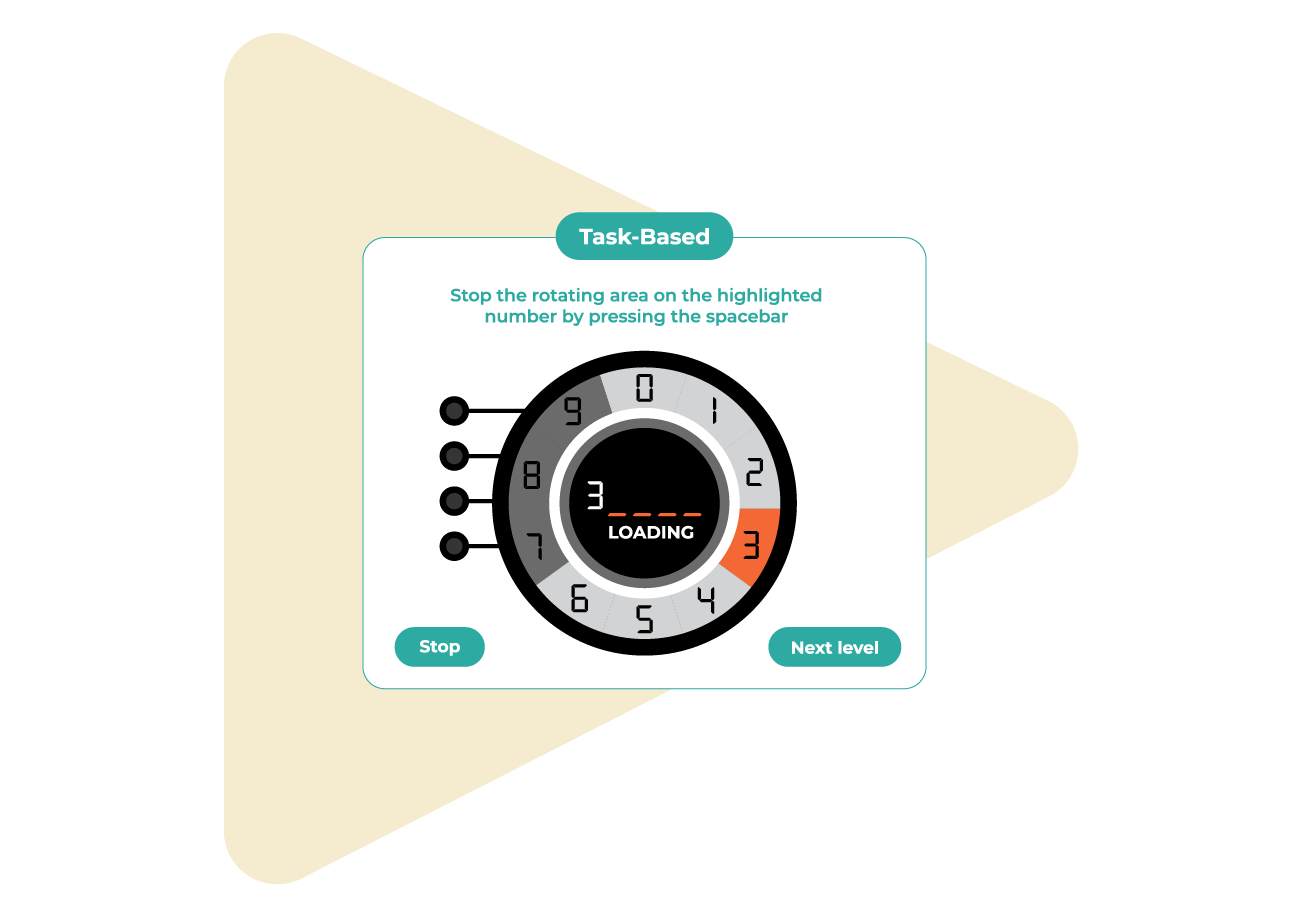

- Task-based: These don’t ask any questions or present any statements. Instead, they let candidates show their authentic behaviour by completing a series of intuitive tasks, based on neuroscientific research. This can be termed a ‘no-knowledge’ approach, as candidates aren’t required to really know anything about their own personality. As a result, these assessments are particularly useful for early careers screening, where candidates have little work experience to go on.

Hiring for Skill-enablers™

Simplify skills-based hiring, and find candidates who will thrive in the AI-enabled workplace, where role requirements change faster than ever.

Hiring for Skill-enablers™ is the only way to predict candidates’ potential to continuously adapt, grow and acquire new skills. The approach is based on decades’ worth of innovation and billions of captured data points (including data on subsequent workplace performance), as well as comprehensive industry analysis of predicted future workforce requirements.

Psychometric test format

Psychometric tests most commonly come in the form of questionnaires, which candidates fill out within a set time period. While these were historically paper-based, today they’re mostly completed online. This shift remains arguably the most widely adopted innovation in the sector’s history.

Self-reporting

These tests generally present statements relating to the candidate’s characteristics, and ask them to decide how well each statement applies to them. This is called self-reporting. Such statements are often constructed to disguise what’s actually being assessed, so as to avoid faking or so-called ‘impression management’, where a candidate tries to paint a more socially desirable picture of themselves. A typical example is ‘I regularly take different routes to work’, rather than ‘I’m very creative’.

While this form of assessment remains the most common, the industry is also seeing the rapid rise of more progressive formats. These include interactive role-playing tasks and ‘game-based’ tests, designed to mitigate test anxiety and produce more authentic, work-relevant responses from candidates, all in the name of painting a clearer picture of their capabilities and suitability for a given role.

On top of this, research has shown that many of these traditional, question-based assessments are now highly vulnerable to candidates using ChatGPT and other large language models (LLMs).

Task-based (like Arctic Shores)

Another way to capture a candidate’s personality, without self-report, is by measuring their natural behaviour in action. That’s what we do at Arctic Shores.

Assessments like ours use tasks that were first established decades ago in the field of neuroscience, bringing them to life in an engaging online experience. The tasks encourage your candidates to make thousands of tiny decisions in real time, with each tap, click and scroll revealing something that we can link to their unique personality.

This type of assessment, also known as game-based or behavioural assessment, contains no questions. That means there’s no chance of candidates using ChatGPT or other LLMs to complete the assessment, and no room for recruiter bias.

And, speaking for our assessment at Arctic Shores, 90% of candidates say they enjoy the experience. Which means it’s often better for them, and better for you, too.

Innovation in the format of psychometric assessments

Arguably the biggest innovation in the industry in terms of format is the effective removal of the self-report, question-and-answer element. Some providers have instead found ways to measure real candidate behaviour with tasks, using interactive, game-like interfaces that capture thousands of data points on how candidates respond to specific stimuli in real time. These Task-based Assessments are often rooted in well-established neuroscience tasks, and so represent an effective blend of the tried-and-tested and the truly innovative.

As we’ve noted, the most efficient and effective way to hire is to use data that can truly predict performance. By allowing you to see how candidates respond in work-relevant scenarios, rather than rely on self-reported data, Task-based Assessments can support more predictive and intelligent hiring.

It’s also worth bearing in mind their positive impact on candidate experience. Self-report tests often fail to induce any real cognitive flow, and instead tend to breed anxiety. Task-based Assessments are far better at encouraging intuitive responses, easing candidates’ feeling that they’re under the microscope. This allows for more authenticity in a less stressful environment.

Benefits of a task-based assessment

- Higher application volumes

- Better-quality candidates

- Reduced attrition

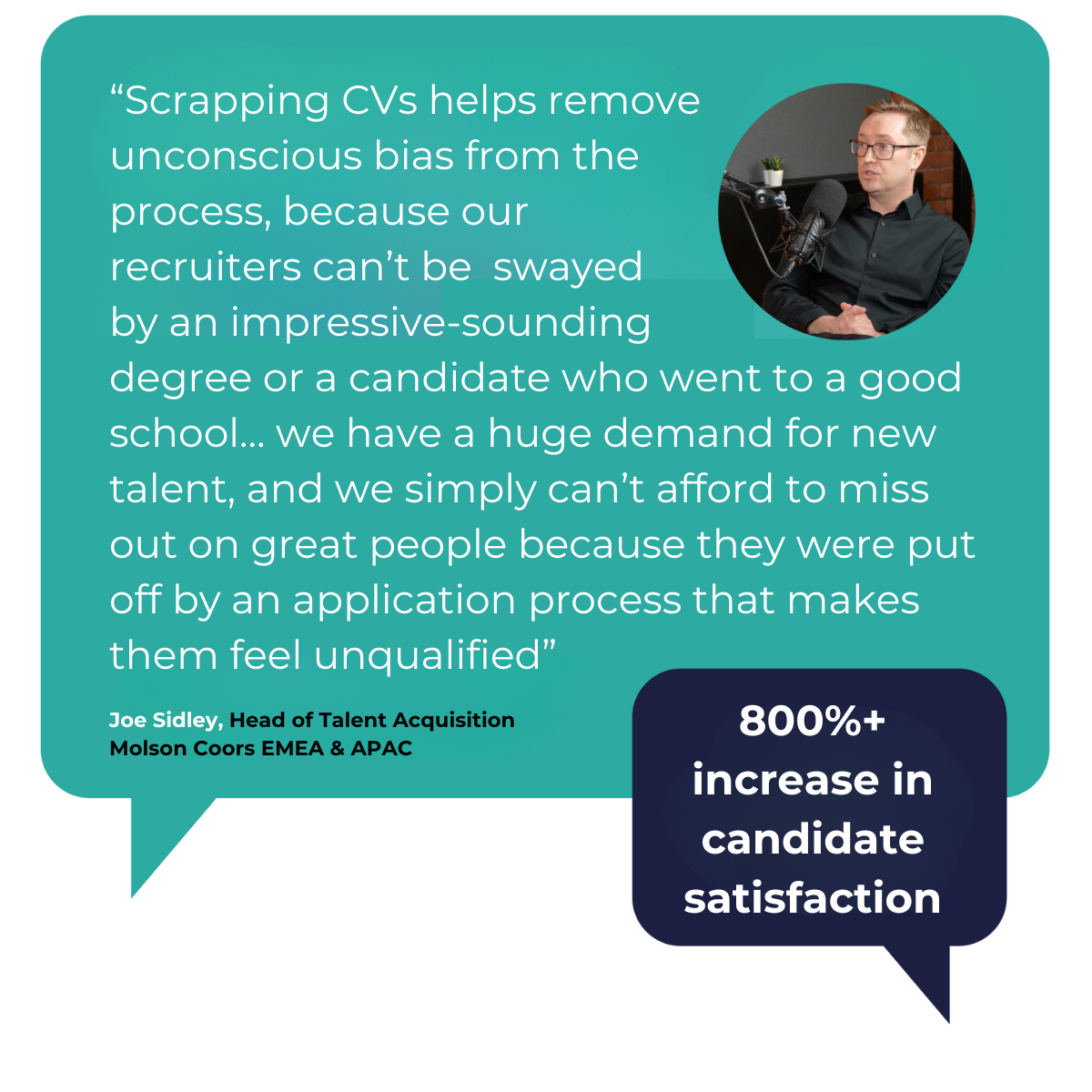

How Molson Coors adopted a pioneering approach to accelerate DEI mission

Head of Talent Acquisition at Molson Coors, Joe Sidley had overseen multiple initiatives to make hiring more inclusive. Bias workshops, gender decoding, blind CVs – they’d done it all and already moved the dial on inclusion. Molson Coors’s foundational value is ‘putting people first’.

But, to truly live up to that value, Joe wanted to do more. He wanted to try something truly innovative and push the boundaries of what an inclusive and equitable hiring process could look like across the UK and Ireland.

Find out how Molson Coors achieved 75% female representation at interview stage, a 100% increase in applications and an 800%+ increase in candidate satisfaction.

Benefits of psychometric testing

Psychometric tests were largely pioneered during wartime to provide more data on the people being selected. That remains one of their core benefits, but it’s not the only reason you might be considering using them today. Growing awareness of challenges like bias and the cost of high turnover means psychometric tests often have far more strings to their bow. Let’s look at some of the common advantages of psychometric testing.

Fast high-volume sifting

Even with UK employment hitting record highs, enterprises can still expect to receive thousands, if not hundreds of thousands, of applications annually. And now, with the rise of auto-apply AI tools, we’re hearing of application volumes rising from 12,000 in 2023 to 45,000 in 2024.

That’s a lot of extra CVs for those in talent acquisition to consider, and so it makes complete sense to sift early in bulk based on candidates’ core strengths and abilities.

This is where psychometrics can be valuable. If you’re on the receiving end of a deluge of applications, psychometric testing can paint a quick, scientific picture of who meets your benchmark for suitability without the need for intensive labour on your part. This, in turn, can lead to shorter hiring processes and considerable cost savings.

Transparency

While candidates often see CVs and interviews as ways to convey their strengths, employers also need to see their development areas. This can be difficult, however, as candidates may feel it’s in their best interests to hide them from you. Psychometric tests can help employers to see these growth areas more clearly, beyond candidates’ efforts to appear socially desirable. This allows you to establish a more complete, transparent picture, with likely development areas balanced alongside the more obvious strengths.

Predictability

The holy grail for anybody in talent acquisition would surely be the ability to predict the right hires before you make them. This is one of the advantages of psychometric testing that affects both the candidate and the employer:

Candidate

In many instances, candidates may not realise that they’re poorly suited to the role they’re applying for. Establishing the right fit beforehand saves them the frustration of feeling like a square peg in a round hole once they’ve started the job. They’re more likely to stay in a rewarding role that they feel suits them, whereas a poor fit may see them quickly leave. This inevitably thrusts employers back into the talent market for that particular role.

Employer

If poorly-suited employees don’t leave of their own volition, there is likely to be a period where your internal resources are drained trying to bring them up to a certain standard. This means added stress for your other employees, tasked with supporting the new recruit. After this period often comes the realisation that job fit cannot be engineered, and so a replacement is required. The search process will need to be repeated, duplicating costs and effort. Previous investments made in training will also be lost.

The ability to predict suitable hires has the potential to save employers from this experience, thereby softening the blow of employee turnover to your annual budget.

Psychometric testing, in most cases, can give you the deeper understanding necessary to tell whether a candidate has what it takes to thrive in both the role and your organisation more generally (taking aspects like culture into account).

In this way, they can help you build on more traditional data sources like CVs and interview responses. These alone aren’t sufficient indicators of candidates’ suitability. Crucially, an overreliance on these (and on one’s ‘gut feeling’) can be a slippery slope to unsuitable, expensive hires.

Psychometrics can help you avoid this trap by providing a new layer of data with which to more accurately predict the right hires for you.

Improving Diversity

Significantly reducing hiring bias and promoting diversity are other benefits of implementing psychometric assessments into your hiring process.

Using interview responses as the main indicator of role suitability, for example, leaves you exposed to so-called homophily: the biologically hardwired tendency for humans to seek out and commune with people who look, sound and think like us. This can mean that, knowingly or not, hiring managers are more likely to hire people of their own ethnicity, gender, class or sexuality.

There’s also the halo effect, whereby a certain feature deemed positive (attractiveness or a particular school, for example) can blind hiring managers to a candidate’s development areas.

Psychometric tests have been shown to strip out much of this bias and help guard against the halo effect, improving the diversity of talent pools in the process. Diverse teams have also been shown to bring numerous benefits. Diversity not only serves a clear ethical purpose, but also helps guard against the risk of all your employees thinking in the same way. By boosting so-called cognitive diversity, you’ll improve your team’s ability to solve problems and innovate.

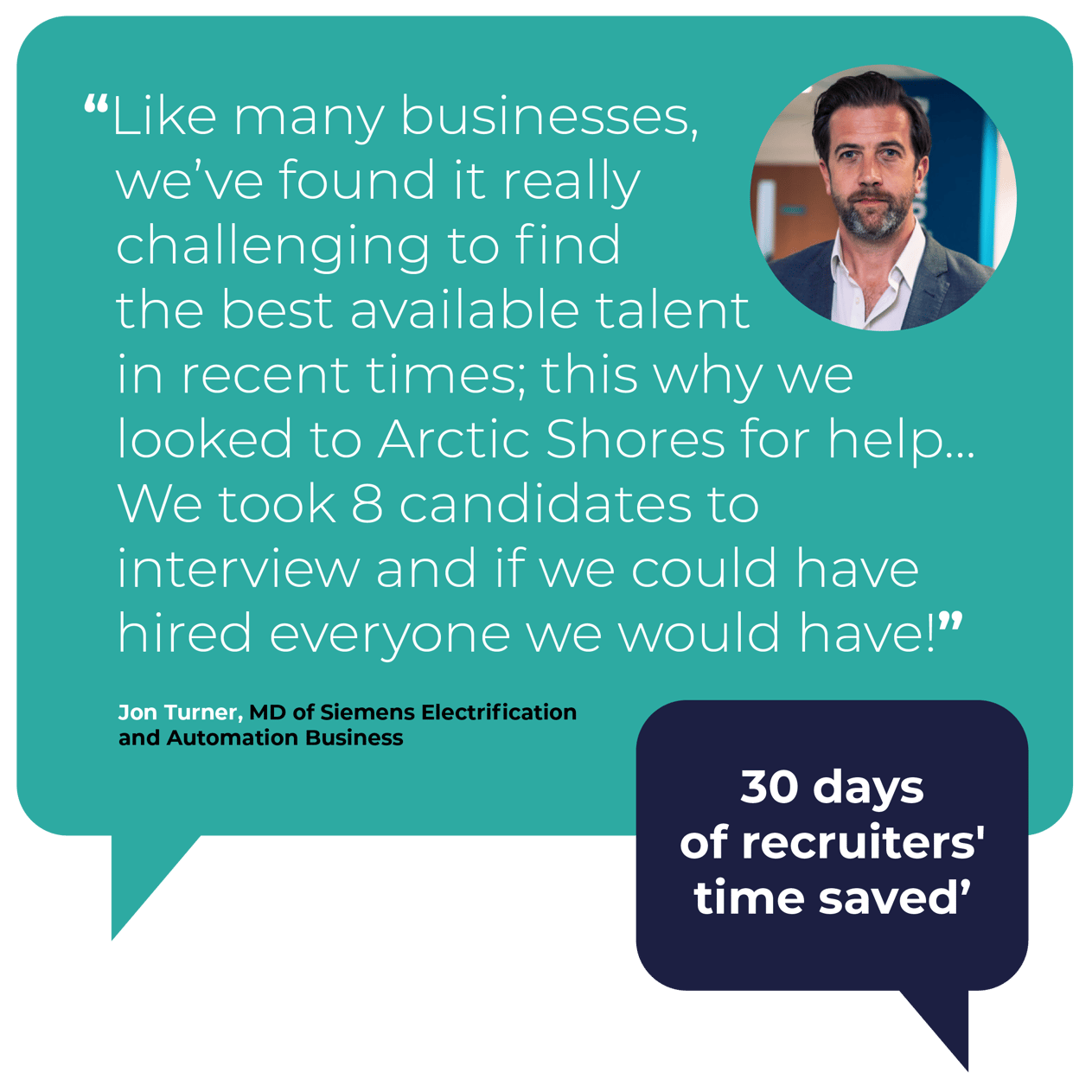

How Siemens used skills-based hiring to solve their skills crisis

Siemens is a global engineering and technology company transforming the world with purpose by combining the real and the digital worlds in addressing some of the world's biggest challenges.

The Siemens team wanted to do things differently and came to Arctic Shores to improve the hiring process for several business-critical Project Engineer roles. They had multiple vacancies for this type of role, some of which had been open for over 200 days.

Find out how they increased applications by 542%, ended up with 50% female candidates at the final stage and saved 242 hours of manual screening.

Things to look out for with psychometric assessments

A psychometric test can be entirely valid, without necessarily pushing the envelope in terms of innovation. Of course, the reverse is also true – some tests, striving to stand out in a market now worth more than $2bn, might sacrifice validity for novelty. It’s up to you, in this growing field, to decide what works best for you and your needs.

It’s not enough to find an innovative assessment. To judge its likely value, you need to establish its reliability and validity.

Psychometric reliability relates to consistency of outcomes. If you’re asked what your favourite colour is, you’re likely to arrive at the same answer every time, making this question a reliable one. By contrast, picture measuring with a rubber ruler in a warm room. As the ruler expands in the heat, its measurements change, so your data could differ each time. This lack of consistency makes the ruler unreliable.

Psychometric validity is a little trickier. Effectively, validity concerns whether a test really measures what it says it measures, and how well that relates to what is ‘real’, such as later performance in the job. It’s an important aspect to establish to ensure your investment will actually have the desired positive effect. There are numerous types of validity, some more rigorous than others.
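Both ideas are often quantified with a correlation coefficient. The Python sketch below uses made-up scores for five people to compute test-retest reliability (the same test taken twice) and criterion, or predictive, validity (test scores against later job-performance ratings) with a hand-written Pearson correlation. The data and the `pearson_r` helper are illustrative assumptions; real validation studies use far larger samples and several kinds of evidence.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists of at least two scores")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data for five people (illustrative numbers, not real results).
FIRST_SITTING = [12, 18, 25, 30, 22]      # test scores, first attempt
SECOND_SITTING = [13, 17, 26, 29, 23]     # same people, retested weeks later
PERFORMANCE = [3.1, 2.9, 3.8, 4.0, 3.2]   # hypothetical later manager ratings

if __name__ == "__main__":
    # Reliability: do the same people get similar scores each time?
    reliability = pearson_r(FIRST_SITTING, SECOND_SITTING)
    # Criterion (predictive) validity: do scores track later performance?
    validity = pearson_r(FIRST_SITTING, PERFORMANCE)
    print(f"test-retest reliability r = {reliability:.2f}")
    print(f"predictive validity r = {validity:.2f}")
```

With these numbers the retest correlation is close to 1, so the hypothetical test is highly consistent, while its correlation with performance ratings is noticeably weaker: a test can be reliable without being equally predictive.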


How do you prepare for a psychometric test?

Most assessment providers say that the best way to prepare for psychometric assessments is practice. Lots and lots of practice. But this tends to favour candidates from certain backgrounds, who can access more training and inevitably get more comfortable managing test anxiety. For the most common types of psychometric assessment (i.e. self-report), this might be the case, but we like to do things a little bit differently.

Our assessment doesn’t require candidates to prepare with practice psychometric tests or other methods in order to score highly, because we’re looking at your candidates’ potential (their unique qualities) rather than testing them.

That said, there are a few things your candidates can do to give themselves the best chance of showing their potential in our assessment:

- Check their internet connection

- Read the instructions

How do you pass a psychometric test?

As we mentioned in the previous section, how to prepare for and pass a psychometric test really depends on the type you’re taking. For more traditional formats, the usual tips and tricks apply: researching what format might be used, finding practice psychometric tests online, understanding what the employer wants in a candidate, not rushing questions and generally reading around the subject are all good places to start.

Learning how to pass the Arctic Shores assessment is much easier, because you can’t!

One of the great things about our assessment is how it sees right to the heart of your candidates’ unique qualities. But it also sees when they’re trying to be someone they’re not. Candidates who use guides from the internet to try to ‘beat’ our assessment rarely, if ever, progress. So it pays for candidates to be themselves: that’s all we’re looking for.

The ultimate guide to managing candidates' use of GenAI

This practical guide gives context, advice and templates to help you understand how to define and communicate your position on candidates' use of GenAI.

It’s curated from best practices in the TA Disruptors community and insights from the likes of Siemens, HelloFresh, The Institute of Student Employers, the Government Skills and Curriculum Unit, UCAS, and many more.

What can candidates expect from the Arctic Shores assessment?

Well, firstly, let’s start with what they shouldn’t expect:

- Hundreds of thinly veiled questions about their behaviour

- Overwhelming test anxiety

- A total lack of feedback

With most psychometric assessments, these would be par for the course. But our assessment’s a bit different. Okay, a lot different. Here’s what your candidates can expect from it:

Tasks, not questions

There are no questions in our innovative take on a psychometric assessment. Instead, we’ve built a series of intuitive, engaging tasks that measure candidates’ unique qualities in action.

There’s no right or wrong with the tasks. All your candidates need to do is approach them as naturally as possible – although, because they never really know what’s being measured at any one time, it’s incredibly hard to fake anyway.

Instant feedback

Once they’ve completed the assessment, we’ll automatically send them a feedback report detailing the qualities they showed.

To create the report, we compare each candidate’s qualities to those of the general population, by placing them in the wider context of a ‘norm group’. Most people judge their qualities against their immediate circle of friends and family, so comparing them with a norm group makes their feedback as realistic and accurate as possible.
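Norm referencing in general can be illustrated with a short Python sketch: a candidate's raw score is placed within a norm group by computing a percentile rank and a z-score. The norm-group scores and both helper functions are hypothetical, and this is a generic textbook method, not a description of how Arctic Shores actually builds its feedback reports.

```python
from statistics import mean, stdev


def percentile_rank(score, norm_scores):
    """Percentage of the norm group scoring below `score` (ties count as half)."""
    below = sum(1 for s in norm_scores if s < score)
    equal = sum(1 for s in norm_scores if s == score)
    return 100 * (below + 0.5 * equal) / len(norm_scores)


def z_score(score, norm_scores):
    """Distance of `score` from the norm-group mean, in standard deviations."""
    return (score - mean(norm_scores)) / stdev(norm_scores)


# Hypothetical raw scores from a norm group (real norm groups are far larger).
NORM_GROUP = [40, 45, 50, 50, 55, 60, 65, 70, 75, 80]

if __name__ == "__main__":
    candidate_score = 65
    print(f"percentile rank: {percentile_rank(candidate_score, NORM_GROUP):.1f}")
    print(f"z-score: {z_score(candidate_score, NORM_GROUP):.2f}")
```

A raw score of 65 sits at the 65th percentile of this made-up group, about 0.45 standard deviations above its mean. The same raw score could look quite different against a different norm group, which is why the choice of comparison population matters.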

Psychometric testing in recruitment

Recruiters are increasingly relying on psychometric testing to choose which candidates to progress to interview. They’re used by about 80% of Fortune 500 firms today, while our research suggests they’re also used by around half of all medium-sized employers.

Psychometric tests are popular for all sorts of reasons. Firstly, they’re designed to make your hiring process fairer, by going beyond the CV to create a level playing field for all candidates. By helping employers put aside things like education, employment history, age or ethnicity, they help employers build more diverse, dynamic teams. They’re also intended to support more data-driven, informed, predictive hiring decisions. That means faster processes, lower costs and less turnover.

All this means that the right psychometric assessment can paint a richer picture of every candidate, helping you gauge real potential before you hire. Taken together with other data – interview responses, for example – they’ve been proven to lead to better hiring processes. Getting the most out of psychometrics, though, comes down to your goals. And, importantly, matching those goals to the right assessment.

Where do psychometric tests fit into the hiring process?

Both aptitude tests and personality assessments tend to be used early in a process to support faster, fairer and more accurate screening. For this reason, they’re particularly common for companies attracting high volumes of candidates.

Using these types of psychometric assessments early on means you can feel more confident you’re progressing the right people to the latter stages of your process. We’ve seen that they can make a real difference to assessment centre pass rates, for example, simply by progressing high-quality candidates that would otherwise slip through the net.

That said, they can also be used later in the process, perhaps as an additional data point to inform hiring managers’ approach to interviews. This is particularly relevant for Task-based Assessments, for two reasons:

- They can capture cultural fit as well as job fit, giving hiring managers a second data point to help them progress the right people.

- Task-based Assessments can pick out behavioural development areas, which can be probed more deeply at interview. This means a more personalised, insightful approach to finding the right people.

Which industries are psychometric tests suitable for?

In short, psychometric tests are suitable for all industries. We’ve already seen how widely they’re used, by Fortune 500 giants and medium-sized employers alike. Any company that runs Early Careers, graduate or high-volume hiring campaigns will benefit from this approach, especially in the age of the AI-enabled candidate, where rising application volumes are making manual CV screening untenable. That said, some of our most successful customers can be found in:

- Professional services

- Financial services

- Technology

- HR & outsourcing

- Engineering, design & manufacturing

- Automotive

- Government agencies & emergency services

Why the Arctic Shores assessment is different

The Arctic Shores assessment uses a Task-based format, which allows you to capitalise on all the great work currently under way in neuroscience and psychology. Advances in these fields are producing new constructs, as well as faster, more effective ways to measure existing ones. In this sense, our assessment offers you the potential to capture the full range of candidate behaviour.

How we measure is different

Arctic Shores is unique in offering an assessment with no text inputs. That’s because text-based measures of candidate potential (CVs, cover letters and question-based psychometric assessments) can now be completed by AI with no specialist training. Peer-reviewed research shows that ChatGPT:

- Outperforms 98.8% of human candidates in verbal reasoning tests

- Scores in the 70th percentile on Situational Judgement Tests, and above the 70th percentile on the Watson-Glaser Critical Thinking Test

- Aces question-based Personality Assessments for any role, by simply reading the job description

Our Task-based Assessment replaces text with interactive tasks, which are proven to be immune to AI models.

Instead of asking candidates to tell us how they think and behave at work, our assessment asks them to show us. Instead of using a self-report questionnaire, we deploy a series of engaging and dynamic tasks, anchored in validated neuroscientific studies.

Candidates complete our tasks from their laptop, phone or tablet. 95% of candidates enjoy the Arctic Shores assessment and say it reflects positively on the employer.

But as well as rethinking how we assess AI-enabled candidates, we also have to consider what to measure in an AI-enabled workplace.

What we measure is also different

While most assessment providers have pivoted to assessing some form of ‘skill’, research shows that the shelf life of many hard skills is now around 2.5 years. This means selecting based only on existing hard and/or soft skills is not the best way to identify candidate potential to adapt, learn, and grow with you over time.

That’s why Arctic Shores assesses candidates' Skill-enablers™ – the core strengths and abilities that enable a person to acquire new skills and to succeed in the role. We assess candidates based on three families of Skill-enablers™ – thinking style, interaction with others, and self-management.

If you evaluate Skill-enablers™ and hire on that basis, you ensure that future employees will be able to learn, re-learn and adapt with you, even if they don’t have the exact checklist of skills you need today. This ensures the longevity of new hires and also opens access to bigger, more diverse talent pools.

This approach is grounded in robust analysis of three data sets:

- Meta-review of research from industry bodies and analysts

- A detailed competency framework analysis

- Analysis of billions of data points gathered from candidates taking our assessment

We believe this is the biggest innovation in psychometrics since the introduction of the Big Five factor model, not least because the Skill-enabler™ model overcomes one of the biggest challenges in psychometrics: assessing personality and cognitive ability together. This means that employers can now view the whole candidate in one assessment, further bolstering its predictive power and improving the candidate experience, while only paying for a single assessment.

We’ve touched on how our assessment’s a little different from the rest, in that it measures your candidates’ natural behaviour with no questions at all. If you dare to be a little different, too, then you’ll soon start seeing more in people.

Time to learn a bit more about psychometric testing or the Arctic Shores assessment?

We know the world of psychometrics can seem like a complicated place. That’s why it’s always best to chat through your options before taking the plunge!

So, if you’d like to take a closer look at our behaviour-based assessment here at Arctic Shores, or you’ve got any questions at all, we’d love to chat. Just get in touch here.

You can also download our Playbook for CV-less hiring to find out how hiring for potential can transform your hiring process.

The TA Disruptors Podcast

This podcast is designed for disruptive Talent Acquisition leaders who want to get ahead: the innovators, the movers and shakers, and the TA leaders who will survive and thrive in this new era by moving quickly to future-proof their recruitment processes. TA leaders are some of the hardest-working folks we know, so even if you’re overworked or overwhelmed, we’ll help you keep up.